Making Decentralized Social Easy

Getting started building on Decentralized Social is as easy as deploying a Web2 API.

Build What You Want

Frequency Developer Gateway offers a suite of tools you can pick and choose from to build the best applications for your users.

- Add decentralized authentication and onboarding workflows

- Connect your users with their universal social graph

- Read, write, and interact with social media content

- More coming...

Web2 API Simplicity with Decentralized Power

- Build your applications faster

- Own your infrastructure

- OpenAPI/Swagger out of the box

- Optimized Docker images

Basic Architecture

Frequency Developer Gateway provides a simple API to interact with the Frequency social layers of identity, content, and more.

These microservices are completely independent of one another, so you can use only those pieces you want or need.

Key Microservices

Account Service

The Account Service enables easy interaction with accounts on Frequency.

Accounts are defined as an msaId (64-bit identifier) and can contain additional information such as a handle, keys, and more.

- Account authentication and creation using SIWF

- Delegation management

- User Handle creation and retrieval

- User key retrieval and management
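Because an msaId is a 64-bit identifier, it can exceed the largest integer that a JavaScript `Number` represents exactly (2^53 - 1). The following is a minimal sketch of one way to handle it safely as a decimal string or `BigInt`. The helper name `parseMsaId` is hypothetical and not part of the Account Service, and the sketch assumes msaIds are unsigned.

```javascript
// Hypothetical helper: parse an msaId supplied as a decimal string.
// Assumption: msaIds are unsigned 64-bit integers.
const MAX_U64 = (1n << 64n) - 1n;

function parseMsaId(value) {
  // Accept only plain decimal digits (no sign, no whitespace)
  if (typeof value !== "string" || !/^[0-9]+$/.test(value)) {
    throw new TypeError("msaId must be a decimal string");
  }
  const id = BigInt(value);
  if (id > MAX_U64) {
    throw new RangeError("msaId does not fit in 64 bits");
  }
  return id;
}

// Number() silently loses precision above 2^53 - 1; BigInt does not.
console.log(Number("9007199254740993"));     // 9007199254740992
console.log(parseMsaId("9007199254740993")); // 9007199254740993n
```

Keeping the value as a string in JSON and converting to `BigInt` only when arithmetic or comparison is needed avoids accidental rounding.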

Content Publishing Service

The Content Publishing Service enables the creation of new content-related activity on Frequency.

- Create posts to publicly broadcast

- Create replies to posts

- Create reactions to posts

- Create updates to existing content

- Request deletion of content

- Store and attach media with IPFS

Content Watcher Service

The Content Watcher Service enables client applications to process content found on Frequency by registering for webhook notifications, triggered when relevant content is found, eliminating the need to interact with the chain for new content.

- Parses and validates Frequency content

- Filterable webhooks

- Scanning control


Getting Started

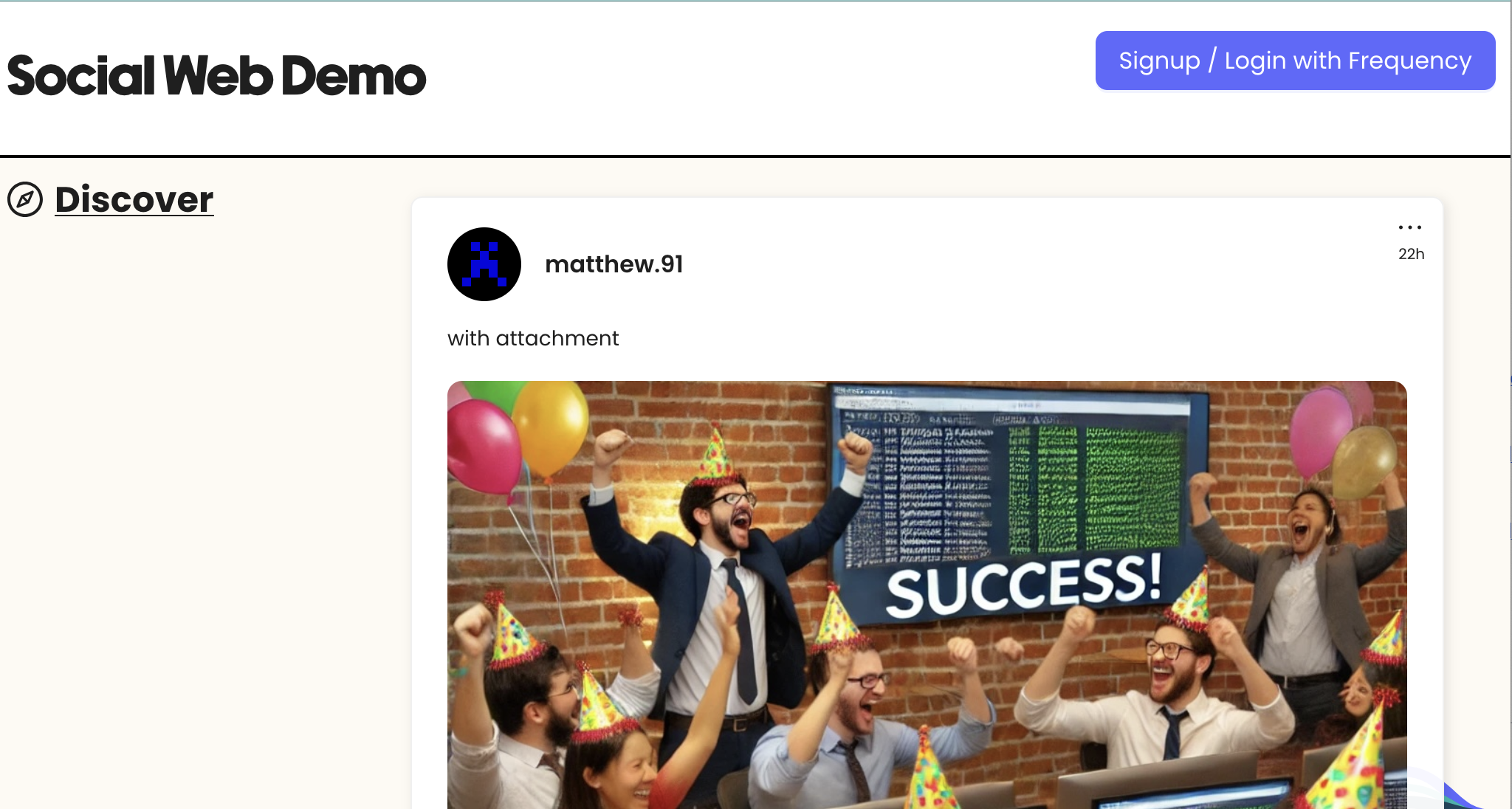

In this tutorial, you will set up the Social App Template example application, which uses Gateway Services. Everything runs locally and connects to the public Frequency Testnet. This gives you a quick introduction to a working integration with Gateway Services and a starting place to explore the possibilities.

Expected Time: ~5 minutes

Version Compatibility: This guide uses Gateway Services v1.5+ which includes Sign In With Frequency (SIWF) v2 support, enhanced credential management with W3C Verifiable Credentials, and improved microservice architecture with dedicated worker services. If you're using the Social App Template, ensure it's compatible with Gateway v1.5+.

Step 1: Prerequisites

Before you begin, ensure you have the following installed on your machine:

- Git

- Docker

- Node.js (v22 or higher recommended)

- A Web3 Polkadot wallet (e.g. Polkadot extension)

Note: This setup uses Sign In With Frequency (SIWF) v2, which integrates with Frequency Access to provide easy, custodial wallet authentication for your users. SIWF v1 is deprecated. Learn more in the SSO Guide.

Step 2: Register on Testnet

To have your application interact on Frequency Testnet, you will need to register as a Provider. This will enable users to delegate to you, and you can use Capacity to fund your chain actions.

Create an Application Account in a Wallet

- Open a wallet extension such as the Polkadot extension

- Follow account creation steps

- Make sure to keep the seed phrase for the service configuration step

Acquire Testnet Tokens

Visit the Frequency Testnet Faucet and get tokens: Testnet Faucet

Create a Provider

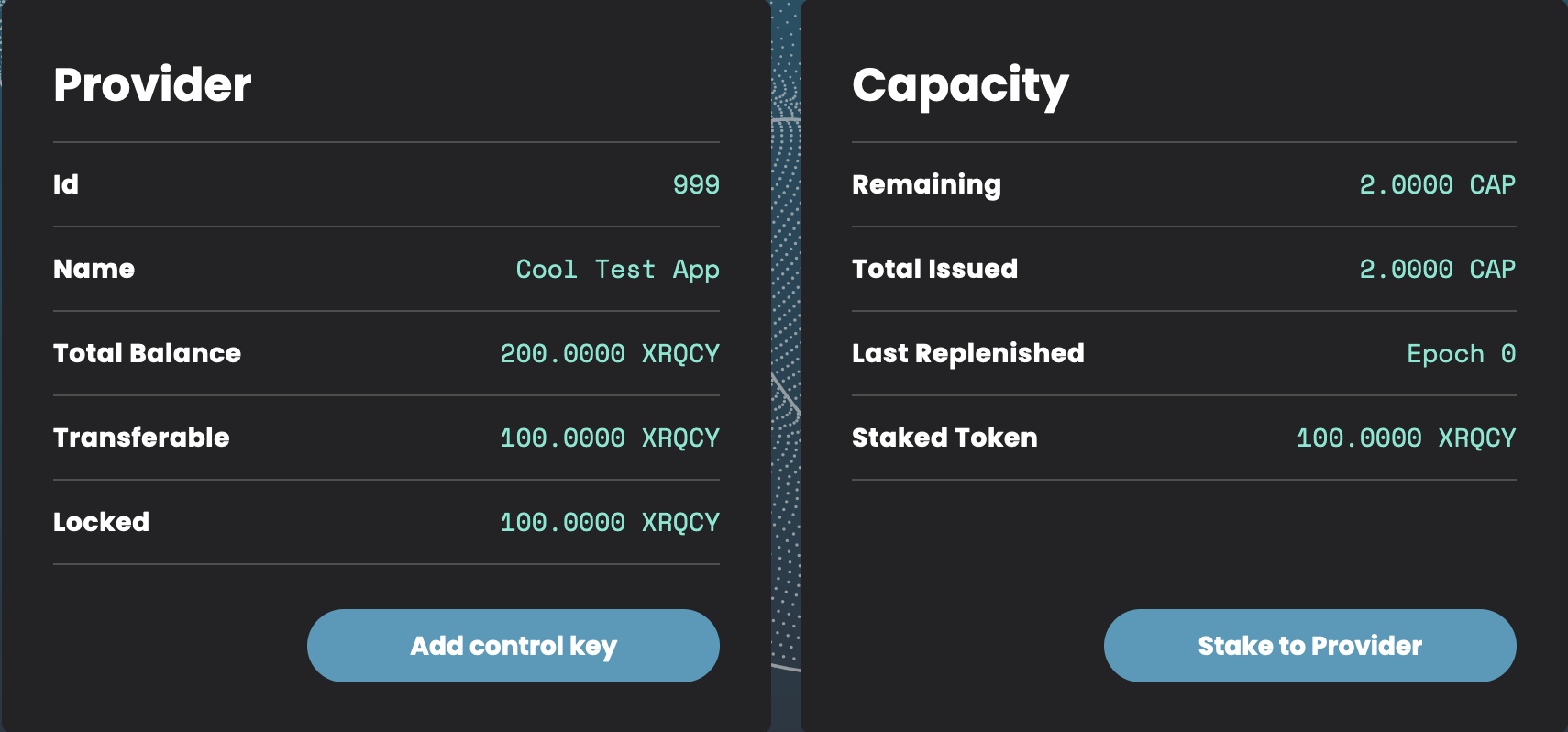

Creating your provider account is easy via the Provider Dashboard.

- Use the same browser with the wallet extension

- Visit the Provider Dashboard

- Select Become a Provider

- Select the Testnet Paseo network

- Connect the Application Account created earlier

- Select Create an MSA and approve the transaction popups

- Choose a public Provider name (e.g. "Cool Test App") and continue via Create Provider

- Stake for Capacity by selecting Stake to Provider and stake 100 XRQCY Tokens

Step 3: Configure and Run the Example

Clone the Example Repository

git clone https://github.com/ProjectLibertyLabs/social-app-template.git

cd social-app-template

Run the Configuration Script

./start.sh

Testnet Setup Help

Use default values when uncertain.

- Do you want to start on Frequency Paseo Testnet? Yes!

- Enter Provider ID: This is the Provider Id from the Provider Dashboard

- Enter Provider Seed Phrase: This is the seed phrase saved from the wallet setup

- Do you want to change the IPFS settings?

  - No, if this is just a test run

  - Yes, if you want to use an IPFS pinning service

Configuration Note: The setup automatically configures SIWF v2 authentication with Frequency Access. The Frequency RPC endpoint used is wss://0.rpc.testnet.amplica.io for Testnet Paseo.

Step 4: Done & What Happened?

You should now be able to access the Social App Template at http://localhost:3000!

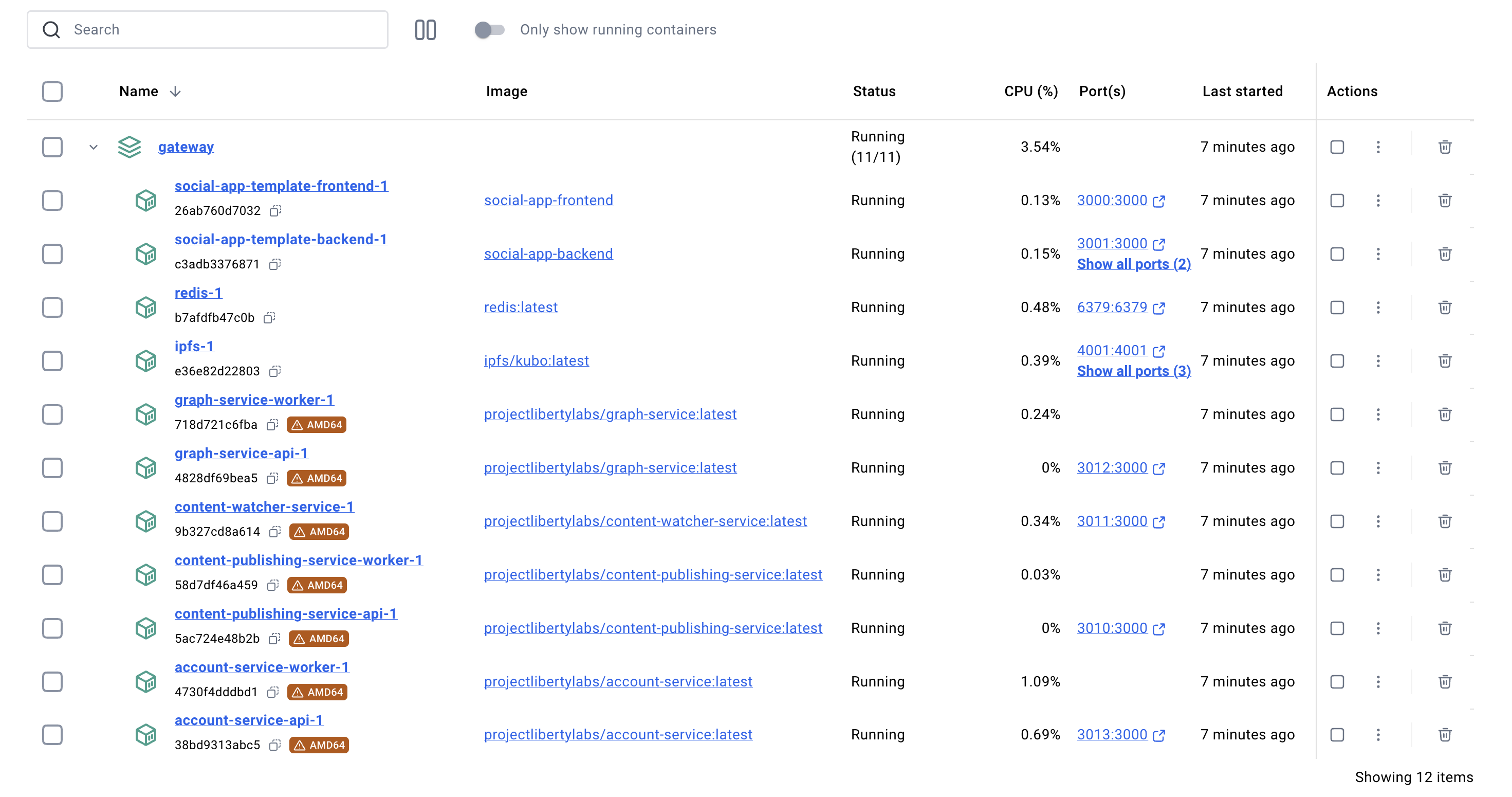

What happened in the background?

All the different services needed were started in Docker. Gateway Services consists of 7 microservices working together:

- Account API & Worker: User account management and authentication with SIWF v2

- Content Publishing API & Worker: Content creation and publishing to blockchain

- Content Watcher: Monitors blockchain for content changes and updates

Each service has dedicated API and worker components for better scalability and performance.

Step 5: Shutdown

Stop all the Docker services via the script (with the option to remove saved data), or just use Docker Desktop.

./stop.sh

What's Next?

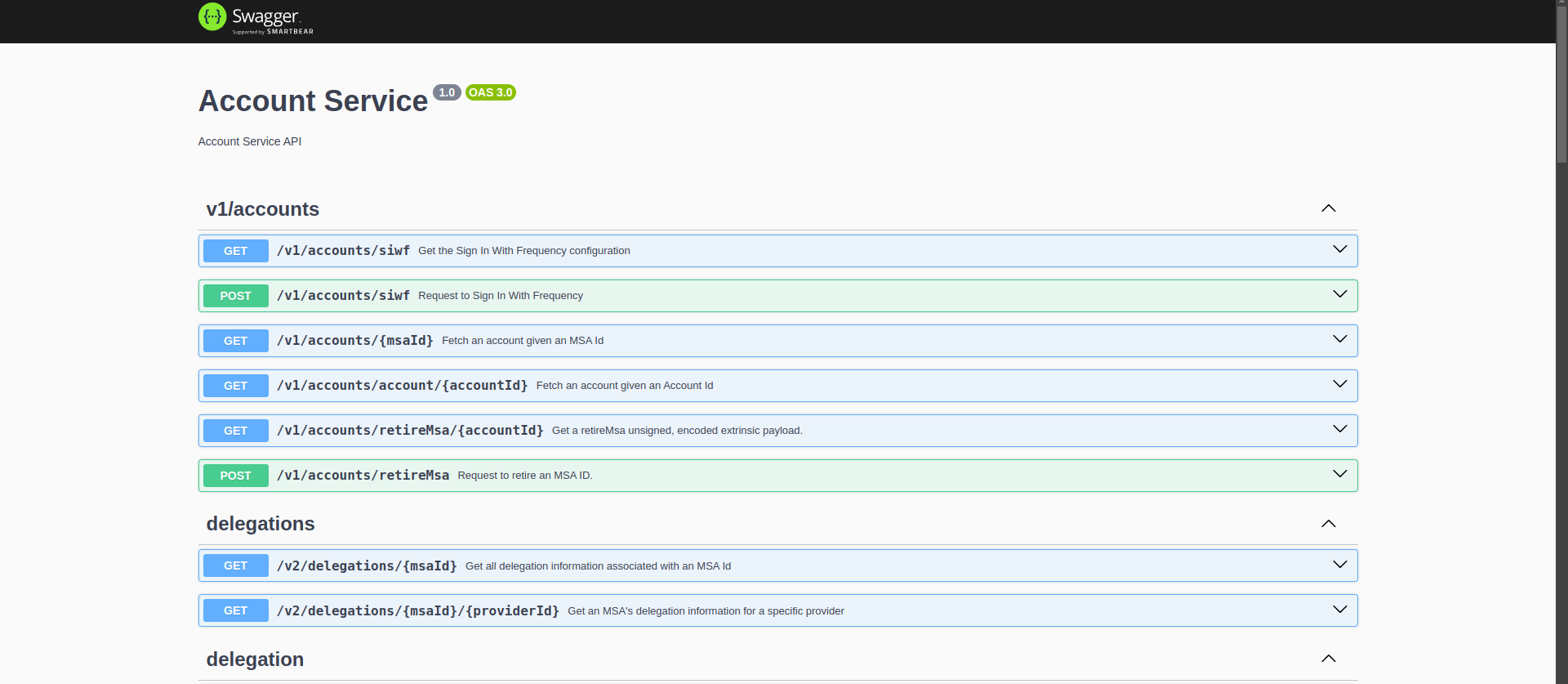

Explore the API Documentation

Open the OpenAPI/Swagger Documentation for each service:

- Account Service: Local | Public

- Account Worker: Public Docs

- Content Publishing Service: Local | Public

- Content Publishing Worker: Public Docs

- Content Watcher Service: Local | Public

Learn More

- Learn about each service

- Read about Running in Production

- SIWF v2 Documentation - Deep dive into authentication

- Frequency Access - User-friendly custodial wallet

- Troubleshooting Guide - Common issues and solutions

New Features in Gateway v1.5+

- SIWF v2 Authentication: Enhanced security and user experience with Frequency Access integration

- W3C Verifiable Credentials: Support for email, phone number, and private graph key credentials

- 7 Microservices Architecture: Complete suite including dedicated worker services for improved scalability

- Enhanced Webhooks: Real-time updates for account changes

- Intent-Based Permissions: Granular delegation control based on Frequency Intents

- Improved Performance: Optimized background job processing with BullMQ workers

Frequency Developer Gateway Guides

Quick Start

Walk through all the steps to get Gateway and the Social Application Template running in 5 minutes.

Single Sign-On

Learn how to use the Frequency Developer Gateway to quickly add Sign In With Frequency (SIWF) v2 authentication to your application:

- Frequency Access (SIWF v2): An easy-to-use custodial social wallet for users on Frequency with support for W3C Verifiable Credentials

- Sign In With Frequency v1 (deprecated)

SIWF v2 provides enhanced security, better user experience, and support for verified credentials including email, phone numbers, and private graph keys.

Become a Provider

Learn how to set up your Provider Account to represent your application on Frequency.

Become a Provider

A Provider is a special kind of user account on Frequency, capable of executing certain operations on behalf of other users (delegators). Any organization wishing to deploy an application that will act on behalf of users must first register as a Provider. This guide will walk you through the steps to becoming a Provider on the Frequency Testnet. See How to Become a Provider on Mainnet, if you are ready to move to production.

Step 1: Generate Your Keys

There are various wallets that can generate and secure Frequency-compatible keys.

This onboarding process will guide you through the creation of an account and the creation of a Provider Control Key which will be required for many different transactions.

Step 2: Acquire Testnet Tokens

Taking the account generated in Step 1, visit the Frequency Testnet Faucet and get tokens: Testnet Faucet

Step 3: Create a Testnet Provider

Creating your provider account is easy via the Provider Dashboard.

- Visit the Provider Dashboard

- Select Become a Provider

- Select the Testnet Paseo network

- Connect the Application Account created earlier

- Select Create an MSA and approve the transaction popups

- Choose a public Provider name (e.g. "Cool Test App") and continue via Create Provider

Step 4: Gain Capacity

Capacity is the ability to perform some transactions without token cost. All interactions with the chain that an application does on behalf of a user can be done with Capacity.

In the Provider Dashboard, log in, select Stake to Provider, and stake 100 XRQCY Tokens.

Step 5: Done!

You are now registered as a Provider on Testnet and have Capacity to do things like support users with Single Sign On.

You can also use the Provider Dashboard to add additional Control Keys for safety.

Ready to Become a Provider on Mainnet?

Want to take the next step to becoming a Provider on Mainnet?

- Securely generate a Frequency Mainnet Account.

- Back up your seed phrase for the account.

- Acquire a small amount of FRQCY tokens.

- Complete the registration with the generated Frequency Mainnet Account via the Provider Dashboard.

The registration process is currently gated to prevent malicious Providers.

Fast Single Sign On with SIWF v2 with Account Service

Sign In With Frequency (SIWF v2) is quick, user-friendly, decentralized authentication using the Frequency blockchain. Coupled with the Account Service, this provides fast and secure SSO across applications by utilizing cryptographic signatures to verify user identities without complex identity management systems.

Key benefits:

- Decentralized authentication

- Integration with the identity system on Frequency

- Support for multiple credentials (e.g., email, phone)

- Secure and fast user onboarding

Resources:

Setup Tutorial

In this tutorial, you will set up a Sign In With Frequency button for use with Testnet, which will enable you to acquire onboarded, authenticated users with minimal steps.

Prerequisites

Before proceeding, ensure you have completed the following steps:

- Registered as a Provider: Register your application as a Provider on Frequency Testnet.

- Completed the Access Form: Fill out the Frequency Access Testnet Account Setup form.

- Set Up a Backend Instance: You need a running instance of the Account Service that is accessible only from your backend.

- Access to a Frequency RPC Node

  - Public Testnet Node: wss://0.rpc.testnet.amplica.io

Overview

- Application creates a signed request SIWF URL that contains a callback URL.

- User clicks a button that uses the signed request URL.

- User visits the SIWF v2 compatible service (e.g. Frequency Access).

- User is processed by the service.

- User returns with the callback URL.

- Application has the Account Service validate and process registration on Frequency if needed.

- User is authenticated.

sequenceDiagram

participant User

participant App

participant Account Service

participant SIWF Service

participant Frequency

User ->> App: Requests login

App ->> SIWF Service: Signed request

SIWF Service ->> User: Authentication steps

User ->> SIWF Service : Authenticate

SIWF Service ->> App: Returns authorization code

App ->> Account Service: Process authorization

Account Service ->> Frequency: Register user changes, if any

Account Service ->> App: User authenticated

App ->> User: User logged in

Step 1: Generate a SIWF v2 Signed Request

The User will be redirected to a service for generating their signed authentication.

Option A: Static Callback and Permissions

If a static callback and permissions are all that is required, a static Signed Request may be generated and used: Signed Request Generator Tool

Option B: Dynamic Callback or Permissions

A dynamic signed request allows for user-specific callbacks. While this is not needed for most applications, some situations require it.

The Account Service provides an API to generate the Signed Request URL:

curl -X GET "https://account-service.internal/v2/accounts/siwf?callbackUrl=https://app.example.com/callback"
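The same request can be built in JavaScript. This is a minimal sketch that only constructs the URL; the function name `buildSignedRequestUrl` is hypothetical, and the host and callback are the placeholder values from the curl example. It uses `URL.searchParams` so that a callback containing its own query string is percent-encoded correctly.

```javascript
// Build the Account Service URL that returns a signed SIWF v2 request.
// Only the path and the callbackUrl parameter come from the curl example.
function buildSignedRequestUrl(accountServiceBase, callbackUrl) {
  const url = new URL("/v2/accounts/siwf", accountServiceBase);
  // searchParams percent-encodes the callback so its own "?" and "=" survive
  url.searchParams.set("callbackUrl", callbackUrl);
  return url.toString();
}

const requestUrl = buildSignedRequestUrl(
  "https://account-service.internal",
  "https://app.example.com/callback?from=login"
);
console.log(requestUrl);
// https://account-service.internal/v2/accounts/siwf?callbackUrl=https%3A%2F%2Fapp.example.com%2Fcallback%3Ffrom%3Dlogin
```

Your backend would then issue a GET to this URL, for example with `fetch(requestUrl)`, and pass the result on to the client.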

Selecting Permissions for Delegation

Permissions define the actions that you as the Application can perform on behalf of the user. They are based on Schemas published to Frequency.

See list of SIWF v2 Available Delegations.

Requesting Credentials

SIWF v2 supports requesting validated credentials such as a phone number, email, and private graph keys.

See list of SIWF v2 Credentials.

Step 2: Forward the User for Authentication

Option A: SIWF Button SDK

The SIWF SDK provides an easy way to use your signedRequest and display a button for your users.

The example below uses the SIWF SDK for the Web. Guides for Android and iOS, which also cover handling the callback correctly, are available in the SIWF SDK documentation.

<!-- Add a button container with data attributes and replace "YOUR_ENCODED_SIGNED_REQUEST" with your "signedRequest" value -->

<div data-siwf-button="YOUR_ENCODED_SIGNED_REQUEST" data-siwf-mode="primary" data-siwf-endpoint="mainnet"></div>

<!-- Include the latest version of the script -->

<script src="https://cdn.jsdelivr.net/npm/@projectlibertylabs/siwf-sdk-web@1.0.1/siwf-sdk-web.min.js"></script>

Option B: Manually

Redirect the user to the URL obtained from the previous step:

window.location.href = "https://testnet.frequencyaccess.com/siwa/start?signedRequest=eyJyZXF1ZXN0ZWRTaWduYXR1cmVzIjp7InB1YmxpY0tleSI6eyJlbmNvZGVkVmFsdWUiOiJmNmNMNHdxMUhVTngxMVRjdmRBQk5mOVVOWFhveUg0N21WVXdUNTl0elNGUlc4eURIIiwiZW5jb2RpbmciOiJiYXNlNTgiLCJmb3JtYXQiOiJzczU4IiwidHlwZSI6IlNyMjU1MTkifSwic2lnbmF0dXJlIjp7ImFsZ28iOiJTUjI1NTE5IiwiZW5jb2RpbmciOiJiYXNlMTYiLCJlbmNvZGVkVmFsdWUiOiIweDNlMTdhYzM3Yzk3ZWE3M2E3YzM1ZjBjYTJkZTcxYmY3MmE5NjlkYjhiNjQyYzU3ZTI2N2Q4N2Q1OTA3ZGM4MzVmYTJjODI4MTdlODA2YTQ5NGIyY2E5Y2U5MjJmNDM1NDY4M2U4YzAxMzY5NTNlMGZlNWExODJkMzU0NjQ2Yzg4In0sInBheWxvYWQiOnsiY2FsbGJhY2siOiJodHRwOi8vbG9jYWxob3N0OjMwMDAiLCJwZXJtaXNzaW9ucyI6WzUsNyw4LDksMTBdfX0sInJlcXVlc3RlZENyZWRlbnRpYWxzIjpbeyJ0eXBlIjoiVmVyaWZpZWRHcmFwaEtleUNyZWRlbnRpYWwiLCJoYXNoIjpbImJjaXFtZHZteGQ1NHp2ZTVraWZ5Y2dzZHRvYWhzNWVjZjRoYWwydHMzZWV4a2dvY3ljNW9jYTJ5Il19LHsiYW55T2YiOlt7InR5cGUiOiJWZXJpZmllZEVtYWlsQWRkcmVzc0NyZWRlbnRpYWwiLCJoYXNoIjpbImJjaXFlNHFvY3poZnRpY2k0ZHpmdmZiZWw3Zm80aDRzcjVncmNvM29vdnd5azZ5NHluZjQ0dHNpIl19LHsidHlwZSI6IlZlcmlmaWVkUGhvbmVOdW1iZXJDcmVkZW50aWFsIiwiaGFzaCI6WyJiY2lxanNwbmJ3cGMzd2p4NGZld2NlazVkYXlzZGpwYmY1eGppbXo1d251NXVqN2UzdnUydXducSJdfV19XX0&mode=dark";

For mobile applications, use an embedded browser to handle the redirection smoothly with minimal impact on user experience. SDKs for Android and iOS are available that handle this part for you.
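For the manual redirect, the URL can be assembled from the encoded signed request instead of being hard-coded. A minimal sketch, assuming the Testnet Frequency Access start URL and the `mode` parameter seen in the example URL above; `buildRedirectUrl` is a hypothetical helper name.

```javascript
// Start URL of the Testnet Frequency Access service, as used in the example above.
const TESTNET_START_URL = "https://testnet.frequencyaccess.com/siwa/start";

// Build the URL the user is redirected to for authentication.
function buildRedirectUrl(signedRequest, mode) {
  const url = new URL(TESTNET_START_URL);
  url.searchParams.set("signedRequest", signedRequest);
  // "mode" is optional; the example above uses "dark"
  if (mode) url.searchParams.set("mode", mode);
  return url.toString();
}

// In a browser:
// window.location.href = buildRedirectUrl(signedRequest, "dark");
console.log(buildRedirectUrl("abc123", "dark"));
// https://testnet.frequencyaccess.com/siwa/start?signedRequest=abc123&mode=dark
```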

Step 3: Handle the Callback

After the user completes authentication, Frequency Access or another SIWF v2 service will redirect the user to your callbackUrl with either an authorizationCode or authorizationPayload.

The Account Service provides an API to validate and process the SIWF v2 authorization:

curl -X POST "https://account-service.internal/v2/accounts/siwf" \

-H "Content-Type: application/json" \

-d '{

"authorizationCode": "received-code"

}'

The response will include the user's credentials, control key, and more:

{

"controlKey": "f6cL4wq1HUNx11TcvdABNf9UNXXoyH47mVUwT59tzSFRW8yDH",

"msaId": "314159265358979323846264338",

"email": "user@example.com",

"phoneNumber": "555-867-5309",

"graphKey": "f6Y86vfvou3d4RGjYJM2k5L7g1HMjVTDMAtVMDh8g67i3VLZi",

"rawCredentials": [

{

"@context": [

"https://www.w3.org/ns/credentials/v2",

"https://www.w3.org/ns/credentials/undefined-terms/v2"

],

"type": [

"VerifiedEmailAddressCredential",

"VerifiableCredential"

],

"issuer": "did:web:frequencyaccess.com",

"validFrom": "2024-08-21T21:28:08.289+0000",

"credentialSchema": {

"type": "JsonSchema",

"id": "https://schemas.frequencyaccess.com/VerifiedEmailAddressCredential/bciqe4qoczhftici4dzfvfbel7fo4h4sr5grco3oovwyk6y4ynf44tsi.json"

},

"credentialSubject": {

"id": "did:key:z6QNucQV4AF1XMQV4kngbmnBHwYa6mVswPEGrkFrUayhttT1",

"emailAddress": "john.doe@example.com",

"lastVerified": "2024-08-21T21:27:59.309+0000"

},

"proof": {

"type": "DataIntegrityProof",

"verificationMethod": "did:web:frequencyaccess.com#z6MkofWExWkUvTZeXb9TmLta5mBT6Qtj58es5Fqg1L5BCWQD",

"cryptosuite": "eddsa-rdfc-2022",

"proofPurpose": "assertionMethod",

"proofValue": "z4jArnPwuwYxLnbBirLanpkcyBpmQwmyn5f3PdTYnxhpy48qpgvHHav6warjizjvtLMg6j3FK3BqbR2nuyT2UTSWC"

}

},

{

"@context": [

"https://www.w3.org/ns/credentials/v2",

"https://www.w3.org/ns/credentials/undefined-terms/v2"

],

"type": [

"VerifiedGraphKeyCredential",

"VerifiableCredential"

],

"issuer": "did:key:z6QNucQV4AF1XMQV4kngbmnBHwYa6mVswPEGrkFrUayhttT1",

"validFrom": "2024-08-21T21:28:08.289+0000",

"credentialSchema": {

"type": "JsonSchema",

"id": "https://schemas.frequencyaccess.com/VerifiedGraphKeyCredential/bciqmdvmxd54zve5kifycgsdtoahs5ecf4hal2ts3eexkgocyc5oca2y.json"

},

"credentialSubject": {

"id": "did:key:z6QNucQV4AF1XMQV4kngbmnBHwYa6mVswPEGrkFrUayhttT1",

"encodedPublicKeyValue": "0xb5032900293f1c9e5822fd9c120b253cb4a4dfe94c214e688e01f32db9eedf17",

"encodedPrivateKeyValue": "0xd0910c853563723253c4ed105c08614fc8aaaf1b0871375520d72251496e8d87",

"encoding": "base16",

"format": "bare",

"type": "X25519",

"keyType": "dsnp.public-key-key-agreement"

},

"proof": {

"type": "DataIntegrityProof",

"verificationMethod": "did:key:z6MktZ15TNtrJCW2gDLFjtjmxEdhCadNCaDizWABYfneMqhA",

"cryptosuite": "eddsa-rdfc-2022",

"proofPurpose": "assertionMethod",

"proofValue": "z2HHWwtWggZfvGqNUk4S5AAbDGqZRFXjpMYAsXXmEksGxTk4DnnkN3upCiL1mhgwHNLkxY3s8YqNyYnmpuvUke7jF"

}

}

]

}
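The callback handling in this step can be sketched in JavaScript as two small helpers. This is an illustration rather than the Account Service client: the helper names are hypothetical, and it assumes the code arrives on the callback URL as an `authorizationCode` query parameter.

```javascript
// Pull the authorization code out of the full callback URL the user returned with.
// Assumption: the code is delivered as an "authorizationCode" query parameter.
function extractAuthorizationCode(callbackHref) {
  const code = new URL(callbackHref).searchParams.get("authorizationCode");
  if (!code) throw new Error("callback did not include an authorizationCode");
  return code;
}

// Build fetch() options equivalent to the curl POST shown in this step.
function buildSiwfPostOptions(authorizationCode) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ authorizationCode }),
  };
}

// Hypothetical usage on your backend:
// const code = extractAuthorizationCode(fullCallbackUrl);
// const res = await fetch("https://account-service.internal/v2/accounts/siwf",
//   buildSiwfPostOptions(code));
// const login = await res.json(); // contains controlKey, msaId, and credentials
```

`JSON.stringify` produces the request body, which avoids hand-written JSON mistakes such as trailing commas.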

Step 4: Initiate a User Session

There are two identifiers included with the response.

The controlKey will always be returned and can be considered unique for the user for this authentication session.

The msaId is the unique identifier of an account on Frequency, but it may not be available immediately if the user is new to Frequency (see Waiting for an MSA Id below).

At this point the user is authenticated! Your application should initiate a session and follow standard session management practices.
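As an illustration, the two identifiers can be captured in a session record when the session is created. The record shape and the name `createSessionRecord` are hypothetical; only `controlKey` and `msaId` come from the Account Service response, and how the record is stored is left to your own session management.

```javascript
// Turn the Account Service SIWF response into a minimal session record.
// The record shape is hypothetical; adapt it to your session store.
function createSessionRecord(siwfResponse, now = Date.now()) {
  if (!siwfResponse || !siwfResponse.controlKey) {
    throw new Error("SIWF response is missing controlKey");
  }
  const msaId = siwfResponse.msaId ?? null;
  return {
    controlKey: siwfResponse.controlKey,
    msaId,
    // New users may not have an MSA Id yet; resolve it later
    msaPending: msaId === null,
    createdAt: now,
  };
}

console.log(createSessionRecord({ controlKey: "f6cL4wq1...", msaId: "12345" }, 0));
```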

Waiting for an MSA Id

If you want to wait for confirmation that the Account Service has (if needed) created an MSA Id for the user, you may use this pair of APIs to confirm it:

- Get the MSA Id by controlKey: GET /v1/accounts/account/{accountId}

- Get the delegation by msaId and providerId: GET /v2/delegations/{msaId}/{providerId}

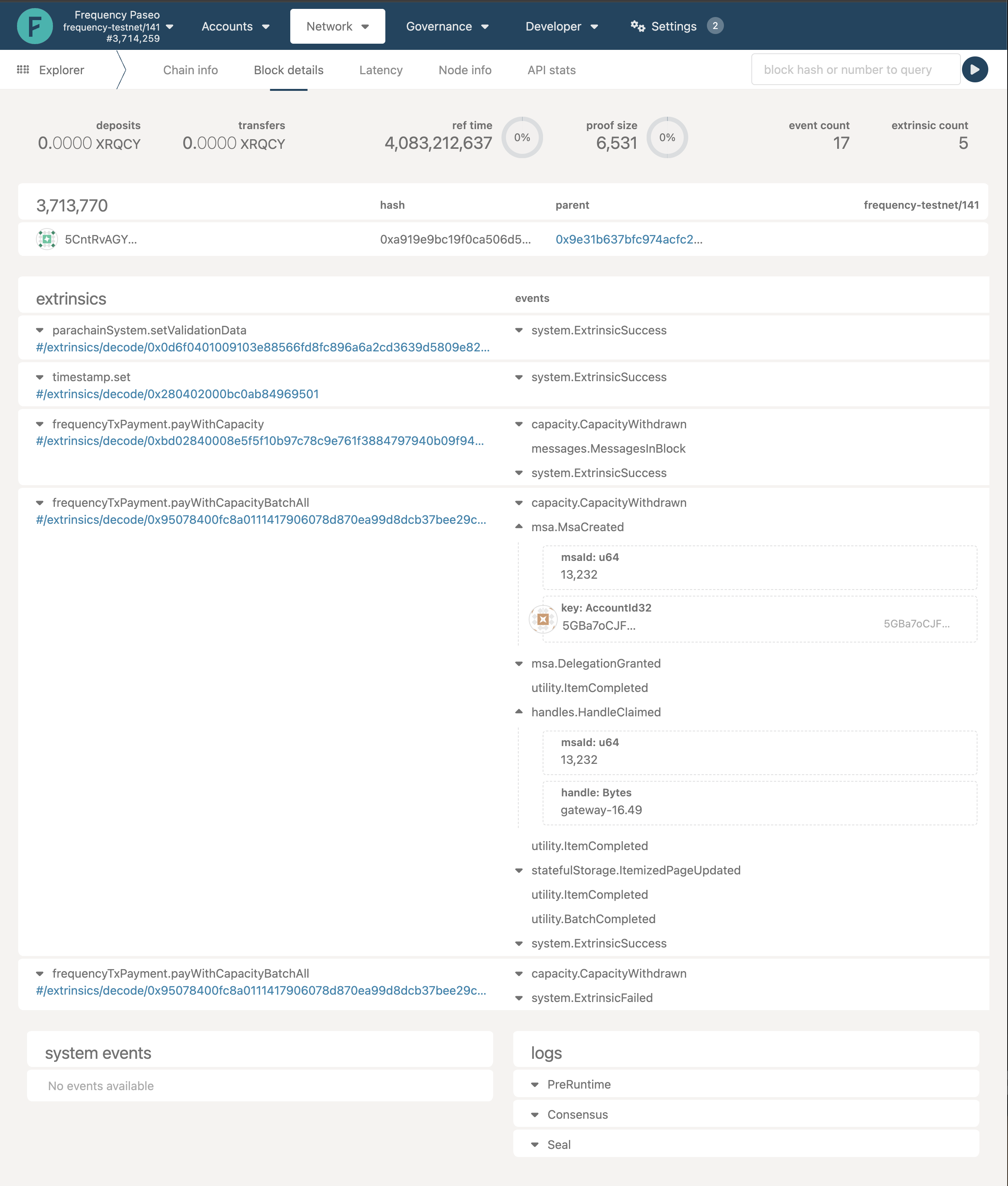

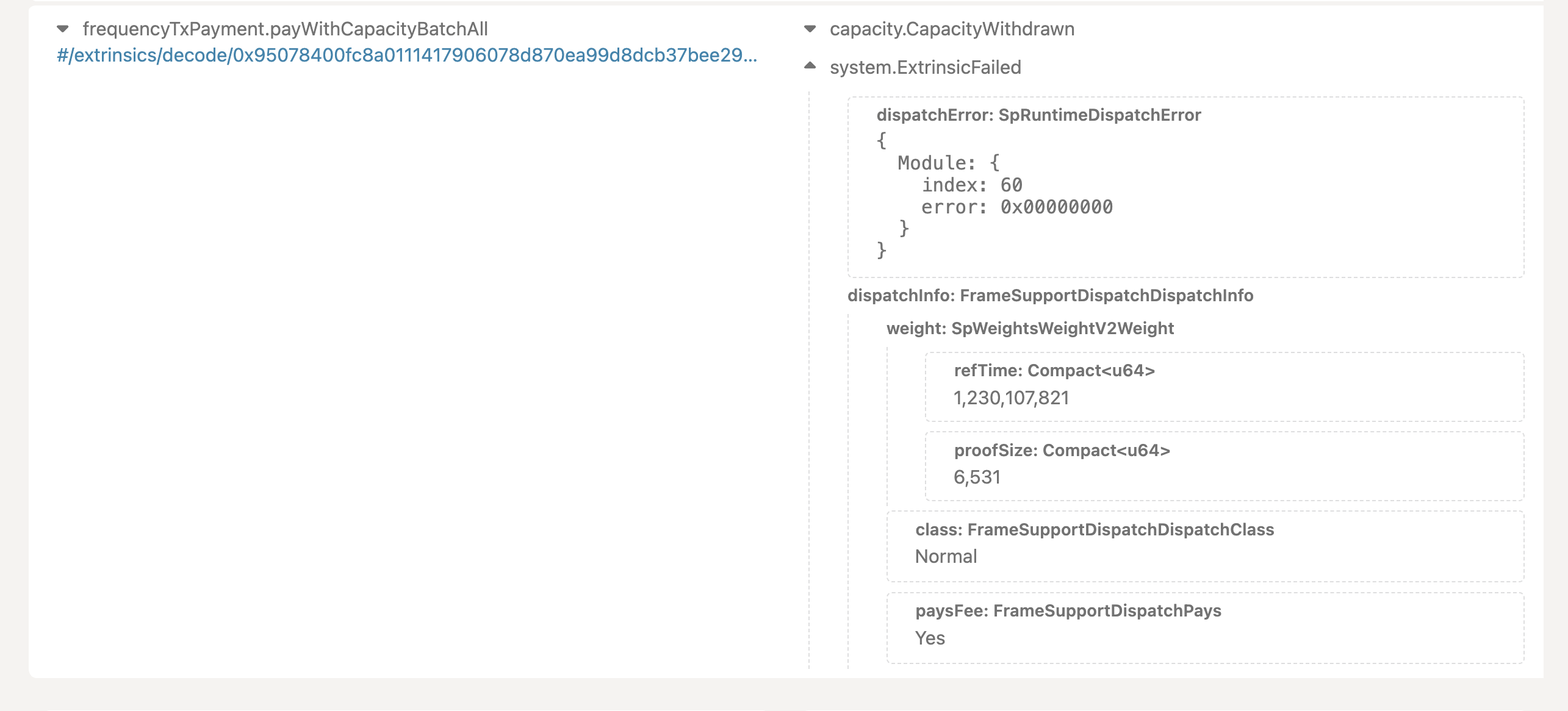
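Waiting for the MSA Id amounts to polling the account endpoint until a value appears. The sketch below keeps the HTTP call outside the loop: `lookupMsaId` is a caller-supplied (hypothetical) async function that returns the msaId for a controlKey, or `null` while the account does not exist yet. The response shape of the endpoint and the retry settings are assumptions.

```javascript
// Path from the API list above; the accountId is the user's controlKey.
function accountPath(controlKey) {
  return `/v1/accounts/account/${encodeURIComponent(controlKey)}`;
}

// Poll until lookupMsaId yields a value, or give up after maxAttempts.
async function waitForMsaId(lookupMsaId, controlKey, { maxAttempts = 10, delayMs = 3000 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const msaId = await lookupMsaId(controlKey);
    if (msaId !== null && msaId !== undefined) return msaId;
    if (attempt < maxAttempts) {
      // Wait before the next attempt
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  return null; // still not available; the caller decides what to do next
}
```

A `lookupMsaId` implementation would typically call the Account Service at `accountPath(controlKey)` and map a not-found response to `null`.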

Behind the Scenes

What's happening in each of these systems?

SIWF v2 Service

Connects or provides the user's wallet to sign the needed payloads to prove they are the controller of their account.

Learn more about the SIWF v2 Specification.

Account Service

- Generates a signed SIWF v2 URL using a Provider Control Key.

- Retrieves and validates the response from the SIWF v2 Callback URL.

Frequency

Provides the source of truth and unique identifiers for each account so that accounts are secure.

Core Concepts

Global State

Frequency provides a shared global state to make interoperability and user control fundamental to the internet. Applications provide unique experiences to their users while accessing content from other applications. Your application interacts with this shared global state seamlessly, in the same way that modern networking software lets isolated computers interact over a global network, moving past artificial application boundaries.

User Control with Delegation

Users are at the core of every application and network. While users must maintain ultimate control, delegation to your application gives you the ability to provide seamless experiences for your users and their data.

Learn more about Delegation in Frequency Documentation.

Interoperability Between Apps

Frequency enables seamless interaction and data sharing between different applications built on its platform. This interoperability is facilitated by:

- Standardized Protocols: Frequency uses the Decentralized Social Networking Protocol (DSNP), an open Web3 protocol that ensures compatibility between different applications.

- Common Data Structures: By using standardized data structures for user profiles, messages, and other social interactions, Frequency ensures that data can be easily shared and interpreted across different applications.

- User Control: Users can switch between different applications without losing their social connections or content, ensuring continuity and control over their digital presence.

By leveraging these principles and infrastructures, Frequency provides a robust platform for developing decentralized social applications that are secure, scalable, and user-centric.

Learn More

Blockchain Basics

Overview of Blockchain Principles for Social Applications

Blockchain technology is a decentralized ledger system where data is stored across multiple nodes, ensuring transparency, security, and immutability.

Reading the Blockchain

RPCs, Universal State, Finalized vs Non-Finalized

- RPCs (Remote Procedure Calls): RPCs are used to interact with the blockchain network. They allow users to query the blockchain state, submit transactions, and perform other operations by sending requests to nodes in the network.

- Universal State: The blockchain maintains a universal state that is agreed upon by all participating nodes. This state includes all the data and transactions that have been validated and confirmed.

- Finalized vs Non-Finalized:

  - Finalized Transactions: Once a transaction is included in a block and that block is finalized, the transaction is considered finalized. Finalized transactions are immutable and cannot be changed or reverted.

  - Non-Finalized Transactions: Transactions that have been submitted to the network but are not yet part of a finalized block are considered non-finalized. They are pending confirmation and can still be rejected or reverted.
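To make the finalized versus non-finalized distinction concrete, here is a minimal sketch of JSON-RPC 2.0 requests for each view of the chain. It assumes the node exposes the standard Substrate RPC methods `chain_getFinalizedHead` and `chain_getHeader`; the helper names and the `rpcUrl` parameter are hypothetical.

```javascript
// Build a JSON-RPC 2.0 request body.
function rpcRequest(method, params = [], id = 1) {
  return { jsonrpc: "2.0", id, method, params };
}

// Hash of the latest finalized block.
const finalizedHeadRequest = rpcRequest("chain_getFinalizedHead");
// Header of the current best block, which may not be finalized yet.
const bestHeaderRequest = rpcRequest("chain_getHeader");

// Send a request over HTTP; rpcUrl is whichever HTTP(S) endpoint your node exposes.
async function callRpc(rpcUrl, request) {
  const res = await fetch(rpcUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(request),
  });
  const { result, error } = await res.json();
  if (error) throw new Error(error.message);
  return result;
}

console.log(JSON.stringify(finalizedHeadRequest));
// {"jsonrpc":"2.0","id":1,"method":"chain_getFinalizedHead","params":[]}
```

Reading from the finalized head trades a short delay for the guarantee that the data will not be reverted.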

Writing Changes to the Blockchain

Transactions

- Transactions are the primary means of updating the blockchain state. They can involve transferring tokens or executing other predefined operations.

Nonces

- Each transaction includes a nonce, a per-account counter that increases with every transaction. It prevents replay attacks by ensuring that each transaction is processed only once and in the correct order.
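The effect of nonces can be illustrated with a small model. This is a deliberately simplified sketch, not how Frequency implements it: the `NonceTracker` class and its status strings are hypothetical, and a real node may queue a transaction with a future nonce rather than only reporting it.

```javascript
// Simplified model of per-account nonce checking.
class NonceTracker {
  constructor() {
    this.nextNonce = new Map(); // account -> next expected nonce
  }

  // Accept a transaction only if its nonce is exactly the next expected one.
  submit(account, nonce) {
    const expected = this.nextNonce.get(account) ?? 0;
    if (nonce < expected) return "stale";  // already used: a replay
    if (nonce > expected) return "future"; // earlier transactions are missing
    this.nextNonce.set(account, expected + 1);
    return "accepted";
  }
}

const tracker = new NonceTracker();
console.log(tracker.submit("alice", 0)); // accepted
console.log(tracker.submit("alice", 0)); // stale
console.log(tracker.submit("alice", 2)); // future
console.log(tracker.submit("alice", 1)); // accepted
```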

Finalization

- Finalization is the process of confirming and adding a transaction to a block. Once a transaction is included in a block and the block is finalized, the transaction becomes immutable.

Block Time

- Block time refers to the interval at which new blocks are added to the blockchain. It determines the speed at which transactions are confirmed and finalized. Shorter block times lead to faster transaction confirmations but can increase the risk of network instability.

Why Blockchain

- Decentralization: Eliminates the need for a central authority, ensuring that users have control over their data and interactions.

- Transparency: All transactions are recorded on a public ledger, providing visibility into the operations of social platforms.

- Security: Advanced cryptographic methods secure user data and interactions, making it difficult for malicious actors to tamper with information.

- Immutability: Once data is recorded on the blockchain, it cannot be altered, ensuring the integrity of user posts, messages, and other social interactions.

- User Empowerment: Users can own their data and have the ability to move freely between different platforms without losing their social connections or content.

Interoperability Between Frequency Social Apps

Frequency enables seamless interaction and data sharing between different social dapps built on its platform. This interoperability is facilitated by:

- Standardized Protocols: Frequency uses the Decentralized Social Networking Protocol (DSNP), an open Web3 protocol that ensures compatibility between different social dapps.

- Common Data Structures: By using standardized data structures for user profiles, messages, and other social interactions, Frequency ensures that data can be easily shared and interpreted across different applications.

- Interoperable APIs: Frequency provides a set of REST APIs that allow developers to build applications capable of interacting with each other, ensuring a cohesive user experience across the ecosystem.

- User Control: Users can switch between different social dapps without losing their social connections or content, ensuring continuity and control over their digital presence.

By leveraging these principles and infrastructures, Frequency provides a robust platform for developing decentralized social applications that are secure, scalable, and user-centric.

Frequency Networks

Mainnet

The Frequency Mainnet is the primary, production-level network where real transactions and interactions occur. It is fully secure and operational, designed to support live applications and services. Users and developers interact with the Mainnet for all production activities, ensuring that all data and transactions are immutable and transparent.

Key Features:

- High Security: Enhanced security protocols to protect user data and transactions.

- Immutability: Once data is written to the Mainnet, it cannot be altered.

- Decentralization: Fully decentralized network ensuring no single point of control.

- Real Transactions: All transactions on the Mainnet are real and involve actual tokens.

URLs

- Public Mainnet RPC URLs

  - 0.rpc.frequency.xyz

  - 1.rpc.frequency.xyz

- Polkadot.js Block Explorer

Testnet

The Frequency Testnet is a testing environment that mirrors the Mainnet. It allows developers to test their applications and services in a safe environment without risking real tokens. The Testnet is crucial for identifying and fixing issues before deploying to the Mainnet.

Key Features:

- Safe Testing: Enables developers to test applications without real-world consequences.

- Simulated Environment: Mirrors the Mainnet to provide realistic testing conditions.

- No Real Tokens: Uses test tokens instead of real tokens, eliminating financial risk.

- Frequent Updates: Regularly updated to incorporate the latest features and fixes for testing purposes.

URLs

- Testnet RPC URL

  - 0.rpc.testnet.amplica.io

- Polkadot.js Block Explorer

- Testnet Token Faucet

Local

The Local network setup is a private, local instance of the Frequency blockchain that developers can run on their own machines. It is used for development, debugging, and testing in a controlled environment. The Local network setup provides the flexibility to experiment with new features and configurations without affecting the Testnet or Mainnet.

Key Features:

- Local Development: Allows developers to work offline and test changes quickly.

- Customizable: Developers can configure the Local network to suit their specific needs.

- Isolation: Isolated from the Mainnet and Testnet, ensuring that testing does not interfere with live networks.

- Rapid Iteration: Facilitates rapid development and iteration, allowing for quick testing and debugging.

URLs

- Local Node: Typically run on http://localhost:9933 or a similar local endpoint depending on the setup.

- Documentation: Frequency Docs

- GitHub Repository: Frequency GitHub

- Project Website: Frequency Website
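One way to keep the three networks straight in application code is a small lookup table. This is a sketch only: the object and function names are hypothetical, the `wss://` scheme for the Mainnet hosts is assumed to match the Testnet endpoint format, and the Local URL is the typical value mentioned above.

```javascript
// RPC endpoints for each Frequency network, taken from the lists above.
// Assumption: Mainnet hosts use the same wss:// scheme as the Testnet endpoint.
const FREQUENCY_NETWORKS = {
  mainnet: ["wss://0.rpc.frequency.xyz", "wss://1.rpc.frequency.xyz"],
  testnet: ["wss://0.rpc.testnet.amplica.io"],
  local: ["http://localhost:9933"],
};

// Return the RPC URLs for a named network, failing loudly on typos.
function rpcUrlsFor(network) {
  if (!Object.prototype.hasOwnProperty.call(FREQUENCY_NETWORKS, network)) {
    throw new Error(`Unknown Frequency network: ${network}`);
  }
  return FREQUENCY_NETWORKS[network];
}

console.log(rpcUrlsFor("testnet")); // [ 'wss://0.rpc.testnet.amplica.io' ]
```

Selecting the network from a single setting makes it harder to point a production build at Testnet by accident.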

Using Polkadot.js Explorer

To interact with the Frequency networks using the Polkadot.js Explorer, follow these steps:

1. Open Polkadot.js Explorer:

- Go to Polkadot.js Explorer.

2. Select Frequency Network:

- Click on the network selection dropdown at the top left corner of the page.

- Choose "POLKADOT & PARACHAINS -> Frequency Polkadot Parachain" for the main network.

- Choose "TEST PASEO & PARACHAINS -> Frequency Paseo Parachain" for the test network.

- For local development, connect to your local node by selecting "DEVELOPMENT -> Local Node or Custom Endpoint" and entering the URL of your local node (e.g., http://localhost:9933).

3. Connect Your Wallet:

- Ensure your Polkadot-supported wallet is connected.

- You will be able to see and interact with your accounts and transactions on the selected Frequency network.

By using these steps, you can easily switch between the different Frequency networks and manage your blockchain activities efficiently.

Frequency Developer Gateway Architecture

Authentication

Gateway and Frequency provide authentication, but not session management. Using cryptographic signatures, you will get proof the user is authenticated without passwords or other complex identity systems to implement. Your application still must manage sessions as is best for your custom needs.

What does it mean for Applications?

- Web2 APIs: Typically use OAuth, API keys, or session tokens for authentication.

- Frequency APIs: Utilize cryptographic signatures for secure authentication, ensuring user identity and data integrity.

Sign In With Frequency (SIWF)

Sign In With Frequency (SIWF) is a method for authenticating users in the Frequency ecosystem. SIWF allows users to authenticate using their Frequency accounts, providing a secure and decentralized way to manage identities.

- SIWF v2 Implementation: Users sign in using a SIWF v2 Authentication Service that uses redirect and callback URLs. The Authentication Service authenticates the user and generates the cryptographic signatures used for authentication.

Account Service

The Account Service in Gateway handles user account management, including creating accounts, managing keys, and delegating permissions. This service replaces traditional user models with decentralized identities and provides a robust framework for user authentication and authorization.

Data Storage

What does it mean for Frequency?

- Web2 APIs: Data is stored in centralized databases managed by the service provider.

- Frequency APIs: Data is stored on the decentralized blockchain (metadata) and off-chain storage (payload), ensuring transparency and user control.

IPFS

InterPlanetary File System (IPFS) is a decentralized storage solution used in the Frequency ecosystem to store large data payloads off-chain. IPFS provides a scalable and resilient way to manage data, ensuring that it is accessible and verifiable across the network.

- Usage in Gateway: Content Publishing Service uses IPFS to store user-generated content such as images, videos, and documents. The metadata associated with this content is stored on the blockchain, while the actual files are stored on IPFS, ensuring decentralization and availability.

Blockchain

The Frequency blockchain stores metadata and transaction records, providing a secure and user-controlled data store. This ensures that all interactions are transparent and traceable, enhancing trust in the system.

- Usage in Gateway: Metadata for user actions, such as content publication, follows/unfollows, and other social interactions, is stored on the blockchain. This ensures that all actions are verifiable and under user control.

Local/Application Data

For efficiency and performance, certain data may be stored locally or within application-specific storage systems. This allows for quick access and manipulation of frequently used data while ensuring that critical information remains secure on the blockchain.

Application / Middleware

Hooking Up All the Microservices

Gateway is designed to support a modular and microservices-based approach. Each service (e.g., Account Service, Content Publishing Service) operates independently but can interact through well-defined APIs.

Here is Where Your Custom Code Goes!

Developers can integrate their custom code within this modular framework, extending the functionality of the existing services or creating new services that interact with the Frequency ecosystem.

Standard Services Gateway Uses

Redis

Redis is a key-value store used for caching and fast data retrieval. It is often employed in microservices architectures to manage state and session data efficiently.

- Why Redis: Redis provides low-latency access to frequently used data, making it ideal for applications that require real-time performance.

- Usage in Gateway: Redis can be used to cache frequently accessed data, manage session states, and optimize database queries.
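As a rough illustration of the caching pattern described above, the sketch below implements cache-aside with a time-to-live. An in-memory `Map` stands in for Redis so the example runs on its own; `TtlCache`, `getOrLoad`, and the injectable clock are hypothetical names for illustration and are not part of Gateway.

```typescript
// Cache-aside with a TTL. A Map stands in for Redis so the sketch is self-contained.
type CacheEntry<V> = { value: V; expiresAt: number };

class TtlCache<V> {
  private readonly entries = new Map<string, CacheEntry<V>>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  // `now` is injectable so expiry can be exercised without waiting.
  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Return the cached value while it is fresh; otherwise call `load`,
  // store the result with a new expiry, and return it.
  getOrLoad(key: string, load: () => V): V {
    const hit = this.entries.get(key);
    if (hit !== undefined && hit.expiresAt > this.now()) {
      return hit.value;
    }
    const value = load();
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }
}
```

With a real Redis client the `Map` lookups would become network calls and the expiry would usually be delegated to Redis itself, but the read-through shape stays the same.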

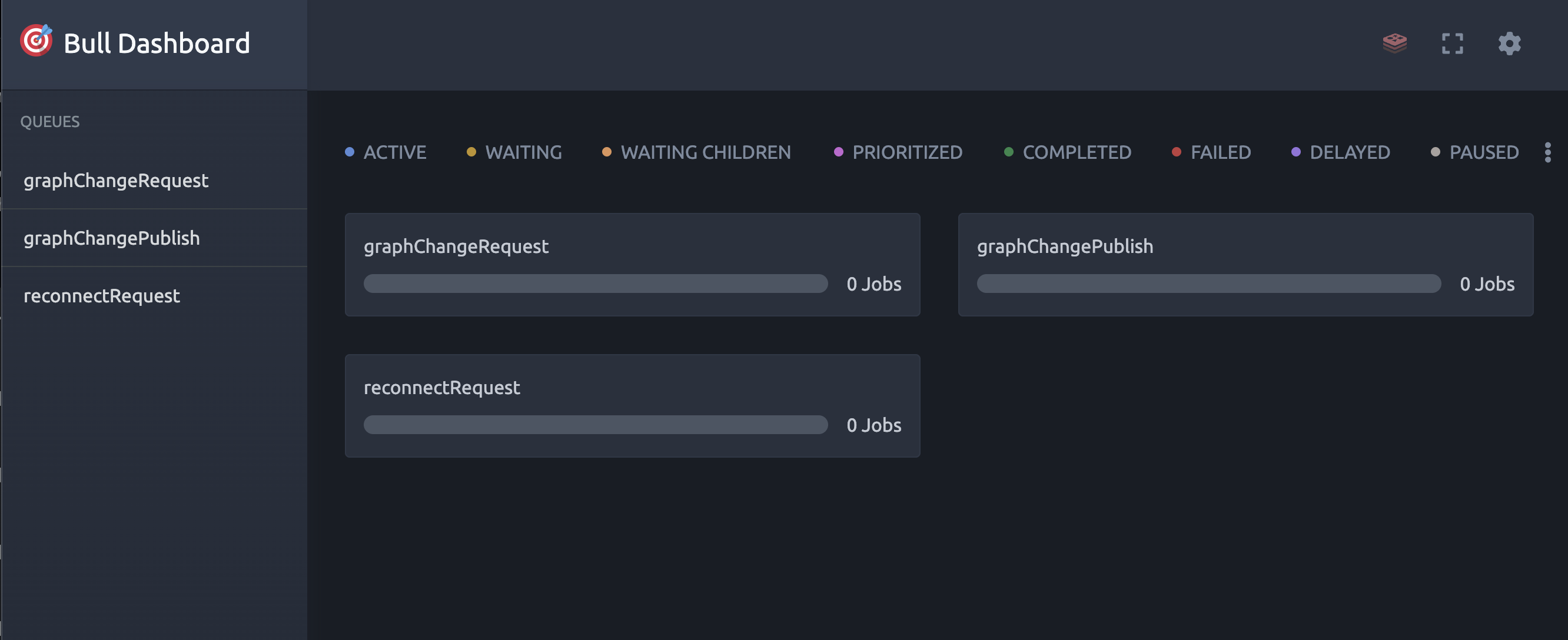

BullMQ

BullMQ is a Node.js library for creating robust job queues with Redis.

- Structure for Redis Queues: BullMQ enhances Redis by providing a reliable and scalable way to manage background jobs and task queues, ensuring that tasks are processed efficiently and reliably.

- Usage in Gateway: BullMQ can be used to handle background processing tasks such as sending notifications, processing user actions, and managing content updates.

IPFS Kubo API

Kubo is a Go implementation of IPFS that exposes a standard RPC API and is designed for high performance and scalability.

- Usage in Gateway: Kubo IPFS is used to manage the storage and retrieval of large files in the Frequency ecosystem, ensuring that data is decentralized and accessible.

Migrating from Web2 to Web3

Step-by-Step Migration Guide

- Assess Your Current Web2 Application

  - Identify core functionalities.

  - Analyze data structures.

  - Review user authentication.

- Understand Frequency and Gateway Services

  - Learn about Frequency blockchain architecture.

  - Understand Gateway services (Account, Content Publishing, Content Watcher).

- Set Up Your Development Environment

  - Install Docker, Node.js, and a Web3 wallet.

  - Clone the Gateway service repositories.

  - Set up Docker containers.

- Configure Gateway Services

  - Create and configure .env files with the necessary environment variables.

- Migrate User Authentication

  - Integrate Web3 authentication using MetaMask or another Web3 wallet.

  - Configure MetaMask to connect to the Frequency TestNet.

- Migrate Data Storage

  - Transition to decentralized storage.

  - Use Frequency blockchain for metadata and off-chain storage for payload data.

- Migrate Core Functionalities

  - Use the Content Publishing Service for creating feeds and posting content.

  - Use the Content Watcher Service for retrieving the latest state of feeds and reactions.

- Test and Validate

  - Perform functional, performance, and security testing.

- Optimize and Deploy

  - Optimize your application for performance on the Frequency blockchain.

  - Deploy your migrated application to the production environment.

- Educate Your Users

  - Provide documentation and support for user onboarding.

  - Establish a feedback loop to gather user feedback and make improvements.

By following these steps, you can successfully migrate your Web2 application to the Gateway and Frequency Web3 environment.

Services

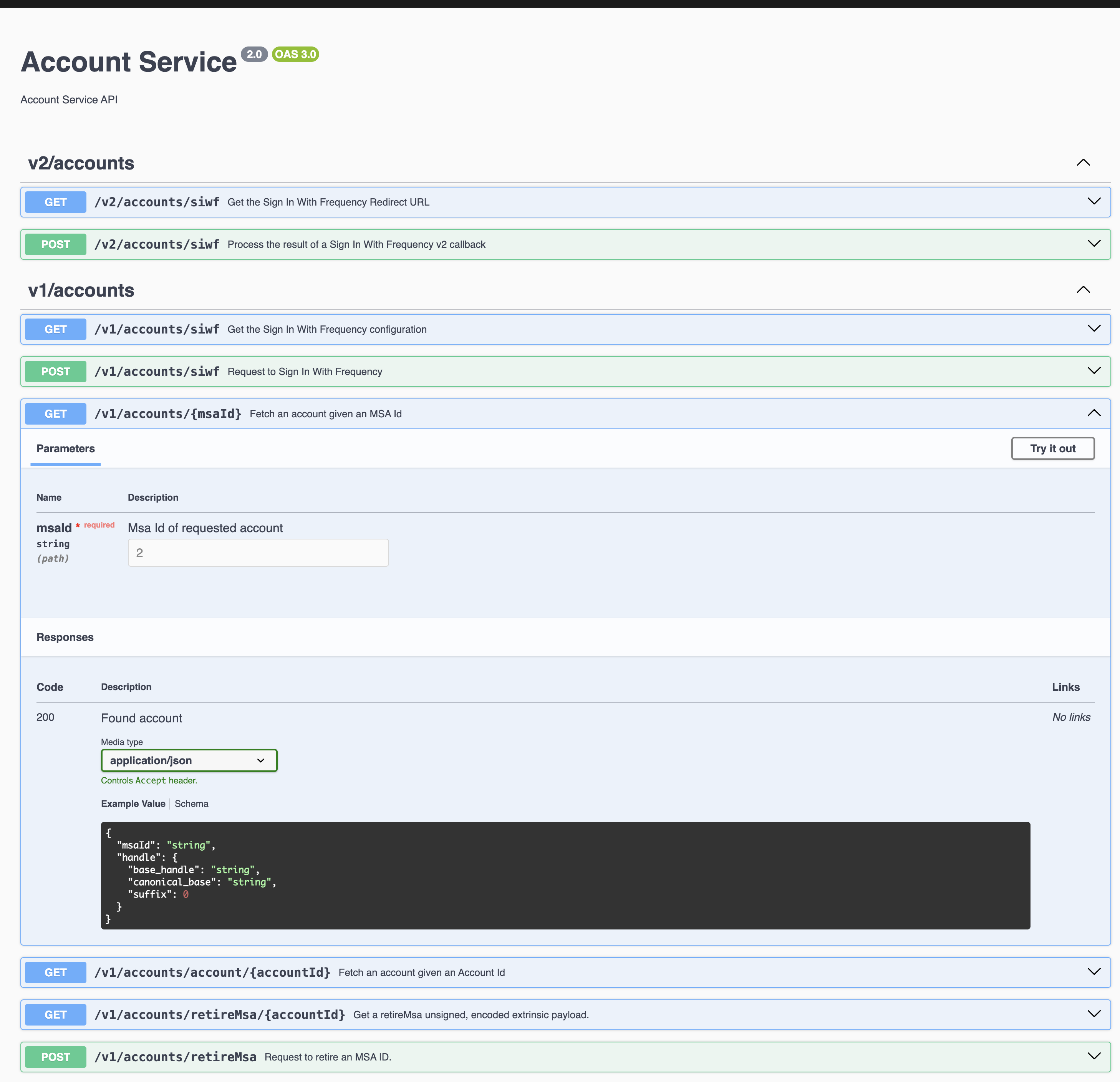

Account Service

The Account Service enables easy interaction with accounts on Frequency.

Accounts are defined as an msaId (64-bit identifier) and can contain additional information such as a handle, keys, and more.

- Account authentication and creation using SIWF

- Delegation management

- User Handle creation and retrieval

- User key retrieval and management

See Account Service Details & API Reference

Content Publishing Service

The Content Publishing Service enables the creation of new content-related activity on Frequency.

- Create posts to publicly broadcast

- Create replies to posts

- Create reactions to posts

- Create updates to existing content

- Request deletion of content

- Store and attach media with IPFS

See Content Publishing Service Details & API Reference

Content Watcher Service

The Content Watcher Service enables client applications to process content found on Frequency by registering for webhook notifications, triggered when relevant content is found, eliminating the need to interact with the chain for new content.

- Parses and validates Frequency content

- Filterable webhooks

- Scanning control

See Content Watcher Service Details & API Reference

Account Service

The Account Service provides functionalities related to user accounts on the Frequency network. It includes endpoints for managing user authentication, account details, delegation, keys, and handles.

API Reference

Configuration

ℹ️ Feel free to adjust your environment variables to taste. This application recognizes the following environment variables:

| Name | Description | Range/Type | Required? | Default |
|---|---|---|---|---|
| API_BODY_JSON_LIMIT | API JSON body size limit, as a string (for example, 100kb or 5mb) | string | | 1mb |
| API_PORT | HTTP port that the application listens on | 1025 - 65535 | | 3000 |
| API_TIMEOUT_MS | Overall API timeout limit in milliseconds. This is the maximum time allowed for any API request to complete. Any HTTP_RESPONSE_TIMEOUT_MS value must be less than this value | > 0 | | 30000 |
| BLOCKCHAIN_SCAN_INTERVAL_SECONDS | How many seconds to delay between successive scans of the chain for new content (after end of chain is reached) | > 0 | | 12 |
| CACHE_KEY_PREFIX | Prefix to use for Redis cache keys | string | Y | |
| CAPACITY_LIMIT | Maximum amount of provider capacity this app is allowed to use (per epoch). type: 'percentage' or 'amount'; value: number (may be a percentage, e.g. '80', or an absolute amount of capacity) | JSON (example) | Y | |
| FREQUENCY_API_WS_URL | Blockchain API Websocket URL | ws(s): URL | Y | |
| FREQUENCY_TIMEOUT_SECS | Frequency chain connection timeout limit; app will terminate if disconnected longer | integer | | 10 |
| HEALTH_CHECK_MAX_RETRIES | Number of /health endpoint failures allowed before marking the provider webhook service down | >= 0 | | 20 |
| HEALTH_CHECK_MAX_RETRY_INTERVAL_SECONDS | Number of seconds to retry provider webhook /health endpoint when failing | > 0 | | 64 |
| HEALTH_CHECK_SUCCESS_THRESHOLD | Minimum number of consecutive successful calls to the provider webhook /health endpoint before it is marked up again | > 0 | | 10 |
| HTTP_RESPONSE_TIMEOUT_MS | Timeout in milliseconds to wait for a response as part of a request to an HTTP endpoint. Must be less than API_TIMEOUT_MS | > 0 and < API_TIMEOUT_MS | | 3000 |
| LOG_LEVEL | Verbosity level for logging | trace, debug, info, warn, error, fatal | N | info |
| PRETTY | Whether logs should be pretty-printed on one line or multiple lines, or plain JSON | true, false, compact | N | false |
| PROVIDER_ACCESS_TOKEN | An optional bearer token for authenticating to the provider webhook | string | | |
| PROVIDER_ACCOUNT_SEED_PHRASE | Seed phrase, URI, or Ethereum private key used for the provider MSA control key | string | Y | |
| PROVIDER_ID | Provider MSA Id | integer | Y | |
| RATE_LIMIT_TTL | Rate limiting time window in milliseconds. Requests are limited within this time window | > 0 | | 60000 |
| RATE_LIMIT_MAX_REQUESTS | Maximum number of requests allowed per time window (TTL) | > 0 | | 100 |
| RATE_LIMIT_SKIP_SUCCESS | Whether to skip counting successful requests (2xx status codes) towards the rate limit | boolean | | false |
| RATE_LIMIT_SKIP_FAILED | Whether to skip counting failed requests (4xx/5xx status codes) towards the rate limit | boolean | | false |
| RATE_LIMIT_BLOCK_ON_EXCEEDED | Whether to block requests when rate limit is exceeded (true) or just log them (false) | boolean | | true |
| RATE_LIMIT_KEY_PREFIX | Redis key prefix for rate limiting storage. Used to namespace rate limiting data in Redis | string | | account:throttle |
| REDIS_OPTIONS | Additional Redis options. See ioredis configuration | JSON string | Y (either this or REDIS_URL) | '{"commandTimeout":10000}' |
| REDIS_URL | Connection URL for Redis | URL | Y (either this or REDIS_OPTIONS) | |
| SIWF_NODE_RPC_URL | Blockchain node address resolvable from the client browser, used for SIWF | http(s): URL | Y | |
| SIWF_URL | SIWF v1: URL for Sign In With Frequency V1 UI | URL | | https://ProjectLibertyLabs.github.io/siwf/v1/ui |
| SIWF_V2_URI_VALIDATION | SIWF v2: Domain (formatted as a single URI or JSON array of URIs) to validate signin requests (*Required if using Sign In with Frequency v2) | Domain (Example: '["https://www.your-app.com", "example://login", "localhost"]') | * | |
| SIWF_V2_URL | SIWF v2: URL for Sign In With Frequency V2 Redirect URL | URL | | Frequency Access |
| TRUST_UNFINALIZED_BLOCKS | Whether to examine blocks that have not been finalized when tracking extrinsic completion | boolean | | false |
| VERBOSE_LOGGING | Enable 'verbose' log level | boolean | N | false |
| WEBHOOK_BASE_URL | Base URL for provider webhook endpoints | URL | Y | |
| WEBHOOK_FAILURE_THRESHOLD | Number of failures allowed in the provider webhook before the service is marked down | > 0 | | 3 |
| WEBHOOK_RETRY_INTERVAL_SECONDS | Number of seconds between provider webhook retry attempts when failing | > 0 | | 10 |
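Several of these variables constrain one another, so it can help to check them before starting the service. The sketch below validates three of them against the ranges and default values listed in the table. `Env`, `readInt`, and `loadTimeoutConfig` are hypothetical helpers written for this example; they are not how the Account Service itself loads its configuration.

```typescript
// Hypothetical pre-flight check for a few Account Service variables.
type Env = Record<string, string | undefined>;

interface TimeoutConfig {
  apiPort: number;
  apiTimeoutMs: number;
  httpResponseTimeoutMs: number;
}

// Read an integer variable, falling back to a default when it is unset.
function readInt(env: Env, name: string, fallback: number): number {
  const raw = env[name];
  if (raw === undefined || raw === "") return fallback;
  const value = Number(raw);
  if (!Number.isInteger(value)) {
    throw new Error(`${name} must be an integer, got "${raw}"`);
  }
  return value;
}

function loadTimeoutConfig(env: Env): TimeoutConfig {
  // Fallbacks are the default values listed for these variables.
  const apiPort = readInt(env, "API_PORT", 3000);
  const apiTimeoutMs = readInt(env, "API_TIMEOUT_MS", 30000);
  const httpResponseTimeoutMs = readInt(env, "HTTP_RESPONSE_TIMEOUT_MS", 3000);

  if (apiPort < 1025 || apiPort > 65535) {
    throw new Error("API_PORT must be between 1025 and 65535");
  }
  if (apiTimeoutMs <= 0) {
    throw new Error("API_TIMEOUT_MS must be greater than 0");
  }
  if (httpResponseTimeoutMs <= 0 || httpResponseTimeoutMs >= apiTimeoutMs) {
    throw new Error("HTTP_RESPONSE_TIMEOUT_MS must be > 0 and < API_TIMEOUT_MS");
  }
  return { apiPort, apiTimeoutMs, httpResponseTimeoutMs };
}
```

In a Node.js process this would typically be called with the process environment; passing the environment in as an argument keeps the check easy to test.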

Best Practices

- Secure Authentication: Always use secure methods (e.g., JWT tokens) for authentication to protect user data.

- Validate Inputs: Ensure all input data is validated to prevent injection attacks and other vulnerabilities.

- Rate Limiting: Implement rate limiting to protect the service from abuse and ensure fair usage.
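To make the rate-limiting advice concrete, here is a minimal fixed-window limiter in the spirit of the `RATE_LIMIT_TTL` and `RATE_LIMIT_MAX_REQUESTS` settings. It keeps counters in memory and takes the current time as an argument; `FixedWindowLimiter` is a hypothetical class for illustration only and is not the Account Service's own implementation, which stores its rate-limit state in Redis.

```typescript
// Fixed-window rate limiter: at most `maxRequests` per `ttlMs` window, per key.
class FixedWindowLimiter {
  private readonly windows = new Map<string, { start: number; count: number }>();
  private readonly ttlMs: number;
  private readonly maxRequests: number;

  constructor(ttlMs: number, maxRequests: number) {
    this.ttlMs = ttlMs;
    this.maxRequests = maxRequests;
  }

  // Returns true if a request from `key` at time `nowMs` is allowed.
  allow(key: string, nowMs: number): boolean {
    const current = this.windows.get(key);
    // Start a new window on the first request or once the old window has elapsed.
    if (current === undefined || nowMs - current.start >= this.ttlMs) {
      this.windows.set(key, { start: nowMs, count: 1 });
      return true;
    }
    if (current.count >= this.maxRequests) {
      return false;
    }
    current.count += 1;
    return true;
  }
}
```

A fixed window is the simplest variant; it allows short bursts at window boundaries, which sliding-window or token-bucket schemes smooth out at the cost of more bookkeeping.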

Account Service

API Reference

Open Direct API Reference Page

Path Table

| Method | Path | Description |
|---|---|---|
| GET | /v2/accounts/siwf | Get the Sign In With Frequency Redirect URL |
| POST | /v2/accounts/siwf | Process the result of a Sign In With Frequency v2 callback |
| GET | /v1/accounts/{msaId} | Fetch an account given an MSA Id |
| GET | /v1/accounts/account/{accountId} | Fetch an account given an Account Id |
| GET | /v1/accounts/retireMsa/{accountId} | Get a retireMsa unsigned, encoded extrinsic payload. |
| POST | /v1/accounts/retireMsa | Request to retire an MSA ID. |
| GET | /v2/delegations/{msaId} | Get all delegation information associated with an MSA Id |
| GET | /v2/delegations/{msaId}/{providerId} | Get an MSA's delegation information for a specific provider |
| GET | /v1/delegation/{msaId} | Get the delegation information associated with an MSA Id |
| GET | /v1/delegation/revokeDelegation/{accountId}/{providerId} | Get a properly encoded RevokeDelegationPayload that can be signed |
| POST | /v1/delegation/revokeDelegation | Request to revoke a delegation |
| POST | /v1/handles | Request to create a new handle for an account |
| POST | /v1/handles/change | Request to change a handle |
| GET | /v1/handles/change/{newHandle} | Get a properly encoded ClaimHandlePayload that can be signed. |
| GET | /v1/handles/{msaId} | Fetch a handle given an MSA Id |
| POST | /v1/keys/add | Add new control keys for an MSA Id |
| GET | /v1/keys/{msaId} | Fetch public keys given an MSA Id |
| GET | /v1/keys/publicKeyAgreements/getAddKeyPayload | Get a properly encoded StatefulStorageItemizedSignaturePayloadV2 that can be signed. |
| POST | /v1/keys/publicKeyAgreements | Request to add a new public Key |
| POST | /v1/ics/{accountId}/publishAll | |
| GET | /v1/frequency/blockinfo | Get information about current block |
| GET | /healthz | Check the health status of the service |
| GET | /livez | Check the live status of the service |
| GET | /readyz | Check the ready status of the service |
| GET | /metrics | |

Reference Table

Path Details

[GET]/v2/accounts/siwf

- Summary

Get the Sign In With Frequency Redirect URL

- Operation id

AccountsControllerV2_getRedirectUrl_v2

Parameters(Query)

credentials?: string[]

permissions?: string[]

callbackUrl: string

Responses

- 200 SIWF Redirect URL

application/json

{

// The base64url encoded JSON stringified signed request

signedRequest: string

// A publicly available Frequency node for SIWF dApps to connect to the correct chain

frequencyRpcUrl: string

// The compiled redirect url with all the parameters already built in

redirectUrl: string

}
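A client needs to turn the query parameters listed above into a request path. The sketch below builds one with plain string handling. `SiwfRedirectQuery` and `buildSiwfRedirectPath` are hypothetical names, and sending array parameters as repeated keys is an assumption about the wire format; check the service's OpenAPI document for the exact encoding.

```typescript
// Query parameters for GET /v2/accounts/siwf, as listed in the reference.
interface SiwfRedirectQuery {
  callbackUrl: string;
  credentials?: string[];
  permissions?: string[];
}

// Build the request path and query string.
// Assumption: arrays are serialized as repeated keys (credentials=a&credentials=b).
function buildSiwfRedirectPath(query: SiwfRedirectQuery): string {
  const parts: string[] = [];
  for (const credential of query.credentials ?? []) {
    parts.push(`credentials=${encodeURIComponent(credential)}`);
  }
  for (const permission of query.permissions ?? []) {
    parts.push(`permissions=${encodeURIComponent(permission)}`);
  }
  parts.push(`callbackUrl=${encodeURIComponent(query.callbackUrl)}`);
  return `/v2/accounts/siwf?${parts.join("&")}`;
}
```

The response's `redirectUrl` is then the address to send the user's browser to; the other two fields are only needed by clients that build the redirect themselves.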

[POST]/v2/accounts/siwf

- Summary

Process the result of a Sign In With Frequency v2 callback

- Operation id

AccountsControllerV2_postSignInWithFrequency_v2

RequestBody

- application/json

{

// The code returned from the SIWF v2 Authentication service that can be exchanged for the payload. Required unless an `authorizationPayload` is provided.

authorizationCode?: string

// The SIWF v2 Authentication payload as a JSON stringified and base64url encoded value. Required unless an `authorizationCode` is provided.

authorizationPayload?: string

}

Responses

- 200 Signed in successfully

application/json

{

// The ss58 encoded MSA Control Key of the login.

controlKey: string

// ReferenceId of an associated sign-up request queued task, if applicable

signUpReferenceId?: string

// Status of associated sign-up request queued task, if applicable

signUpStatus?: string

// The user's MSA Id, if one is already created. Will be empty if it is still being processed.

msaId?: string

// The user's validated email

email?: string

// The user's validated SMS/Phone Number

phoneNumber?: string

// The user's Private Graph encryption key.

graphKey?: #/components/schemas/GraphKeySubject

// The user's recovery secret.

recoverySecret?: string

rawCredentials: {

}[]

}
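The request body above marks both fields optional, but the field descriptions require at least one of them. A small guard like the following can enforce that before the POST is sent. `WalletV2LoginRequest` mirrors the documented body; `buildLoginRequest` is a hypothetical helper for this example.

```typescript
// Mirrors the documented request body for POST /v2/accounts/siwf.
interface WalletV2LoginRequest {
  authorizationCode?: string;
  authorizationPayload?: string;
}

// Drop empty fields and insist that at least one of the two values is present.
function buildLoginRequest(input: WalletV2LoginRequest): WalletV2LoginRequest {
  const code = input.authorizationCode;
  const payload = input.authorizationPayload;
  const body: WalletV2LoginRequest = {};
  if (code !== undefined && code !== "") {
    body.authorizationCode = code;
  }
  if (payload !== undefined && payload !== "") {
    body.authorizationPayload = payload;
  }
  if (body.authorizationCode === undefined && body.authorizationPayload === undefined) {
    throw new Error("Provide authorizationCode or authorizationPayload");
  }
  return body;
}
```

In the response, `msaId` may be absent while a sign-up is still being processed; in that case `signUpReferenceId` and `signUpStatus` identify the queued task.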

[GET]/v1/accounts/{msaId}

- Summary

Fetch an account given an MSA Id

- Operation id

AccountsControllerV1_getAccountForMsa_v1

Responses

- 200 Found account

application/json

{

msaId: string

handle: {

base_handle: string

canonical_base: string

suffix: number

}

}

[GET]/v1/accounts/account/{accountId}

- Summary

Fetch an account given an Account Id

- Operation id

AccountsControllerV1_getAccountForAccountId_v1

Responses

- 200 Found account

application/json

{

msaId: string

handle: {

base_handle: string

canonical_base: string

suffix: number

}

}

[GET]/v1/accounts/retireMsa/{accountId}

- Summary

Get a retireMsa unsigned, encoded extrinsic payload.

- Operation id

AccountsControllerV1_getRetireMsaPayload_v1

Responses

- 200 Created extrinsic

application/json

{

// Hex-encoded representation of the "RetireMsa" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

// AccountId in hex or SS58 format

accountId: string

}

[POST]/v1/accounts/retireMsa

- Summary

Request to retire an MSA ID.

- Operation id

AccountsControllerV1_postRetireMsa_v1

RequestBody

- application/json

{

// Hex-encoded representation of the "RetireMsa" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

// AccountId in hex or SS58 format

accountId: string

// signature of the owner

signature: string

}

Responses

- 201 Created and queued request to retire an MSA ID

application/json

{

// Job state

state?: string

referenceId: string

}
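The two retireMsa endpoints form a fetch, sign, submit sequence: the GET response carries `payloadToSign`, and the POST body is that same response plus a `signature`. The sketch below shows only the assembly step. The `sign` callback is a placeholder for whatever wallet or keyring produces the signature, and `buildRetireMsaRequest` is a hypothetical helper; real signers are usually asynchronous.

```typescript
// Shape returned by GET /v1/accounts/retireMsa/{accountId}.
interface RetireMsaPayloadResponse {
  encodedExtrinsic: string;
  payloadToSign: string;
  accountId: string;
}

// Shape expected by POST /v1/accounts/retireMsa.
interface RetireMsaRequest extends RetireMsaPayloadResponse {
  signature: string;
}

// Combine the fetched payload with the owner's signature over `payloadToSign`.
// `sign` is a stand-in for the user's wallet; it is not a Gateway function.
function buildRetireMsaRequest(
  payload: RetireMsaPayloadResponse,
  sign: (payloadToSign: string) => string,
): RetireMsaRequest {
  return { ...payload, signature: sign(payload.payloadToSign) };
}
```

The POST responds with a `referenceId` for the queued job rather than a final result, so the outcome arrives later.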

[GET]/v2/delegations/{msaId}

- Summary

Get all delegation information associated with an MSA Id

- Operation id

DelegationsControllerV2_getDelegation_v2

Responses

- 200 Found delegation information

application/json

{

msaId: string

delegations: {

providerId: string

schemaDelegations: {

schemaId: number

revokedAtBlock?: number

}[]

revokedAtBlock?: number

}[]

}
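A common use of this response is to work out which schemas a provider may currently act on. The sketch below filters the nested structure for one provider. It assumes that a missing or zero `revokedAtBlock` means the grant is still active and that a grant stops applying once the current block reaches that number; that interpretation is an assumption to confirm against the Frequency documentation. `isActive` and `activeSchemaIds` are hypothetical helpers.

```typescript
// Types mirroring the documented response shape.
interface SchemaDelegation {
  schemaId: number;
  revokedAtBlock?: number;
}

interface Delegation {
  providerId: string;
  schemaDelegations: SchemaDelegation[];
  revokedAtBlock?: number;
}

interface DelegationResponseV2 {
  msaId: string;
  delegations: Delegation[];
}

// Assumption: undefined or 0 means "not revoked".
function isActive(revokedAtBlock: number | undefined, currentBlock: number): boolean {
  return revokedAtBlock === undefined || revokedAtBlock === 0 || currentBlock < revokedAtBlock;
}

// Schema ids the given provider is still delegated for at `currentBlock`.
function activeSchemaIds(
  response: DelegationResponseV2,
  providerId: string,
  currentBlock: number,
): number[] {
  const ids: number[] = [];
  for (const delegation of response.delegations) {
    if (delegation.providerId !== providerId) continue;
    if (!isActive(delegation.revokedAtBlock, currentBlock)) continue;
    for (const schema of delegation.schemaDelegations) {
      if (isActive(schema.revokedAtBlock, currentBlock)) ids.push(schema.schemaId);
    }
  }
  return ids;
}
```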

[GET]/v2/delegations/{msaId}/{providerId}

- Summary

Get an MSA's delegation information for a specific provider

- Operation id

DelegationsControllerV2_getProviderDelegation_v2

Responses

- 200 Found delegation information

application/json

{

msaId: string

delegations: {

providerId: string

schemaDelegations: {

schemaId: number

revokedAtBlock?: number

}[]

revokedAtBlock?: number

}[]

}

[GET]/v1/delegation/{msaId}

- Summary

Get the delegation information associated with an MSA Id

- Operation id

DelegationControllerV1_getDelegation_v1

Responses

- 200 Found delegation information

application/json

{

providerId: string

schemaPermissions: {

}

revokedAt: {

}

}

[GET]/v1/delegation/revokeDelegation/{accountId}/{providerId}

- Summary

Get a properly encoded RevokeDelegationPayload that can be signed

- Operation id

DelegationControllerV1_getRevokeDelegationPayload_v1

Responses

- 200 Returned an encoded RevokeDelegationPayload for signing

application/json

{

// AccountId in hex or SS58 format

accountId: string

// MSA Id of the provider to whom the requesting user wishes to delegate

providerId: string

// Hex-encoded representation of the "revokeDelegation" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

}

[POST]/v1/delegation/revokeDelegation

- Summary

Request to revoke a delegation

- Operation id

DelegationControllerV1_postRevokeDelegation_v1

RequestBody

- application/json

{

// AccountId in hex or SS58 format

accountId: string

// MSA Id of the provider to whom the requesting user wishes to delegate

providerId: string

// Hex-encoded representation of the "revokeDelegation" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

// signature of the owner

signature: string

}

Responses

- 201 Created and queued request to revoke a delegation

application/json

{

// Job state

state?: string

referenceId: string

}

[POST]/v1/handles

- Summary

Request to create a new handle for an account

- Operation id

HandlesControllerV1_createHandle_v1

RequestBody

- application/json

{

// AccountId in hex or SS58 format

accountId: string

payload: {

// base handle in the request

baseHandle: string

// expiration block number for this payload

expiration: number

}

// proof is the signature for the payload

proof: string

}

Responses

- 200 Handle creation request enqueued

application/json

{

// Job state

state?: string

referenceId: string

}

- 400 Invalid handle provided or provided signature is not valid for the payload!

[POST]/v1/handles/change

- Summary

Request to change a handle

- Operation id

HandlesControllerV1_changeHandle_v1

RequestBody

- application/json

{

// AccountId in hex or SS58 format

accountId: string

payload: {

// base handle in the request

baseHandle: string

// expiration block number for this payload

expiration: number

}

// proof is the signature for the payload

proof: string

}

Responses

- 200 Handle change request enqueued

application/json

{

// Job state

state?: string

referenceId: string

}

- 400 Invalid handle provided or provided signature is not valid for the payload!

[GET]/v1/handles/change/{newHandle}

- Summary

Get a properly encoded ClaimHandlePayload that can be signed.

- Operation id

HandlesControllerV1_getChangeHandlePayload_v1

Responses

- 200 Returned an encoded ClaimHandlePayload for signing

application/json

{

payload: {

// base handle in the request

baseHandle: string

// expiration block number for this payload

expiration: number

}

// Raw encodedPayload is the SCALE-encoded payload in hex format

encodedPayload: string

}

- 400 Invalid handle provided

[GET]/v1/handles/{msaId}

- Summary

Fetch a handle given an MSA Id

- Operation id

HandlesControllerV1_getHandle_v1

Responses

- 200 Found a handle

application/json

{

base_handle: string

canonical_base: string

suffix: number

}

[POST]/v1/keys/add

- Summary

Add new control keys for an MSA Id

- Operation id

KeysControllerV1_addKey_v1

RequestBody

- application/json

{

// msaOwnerAddress representing the target of this request

msaOwnerAddress: string

// msaOwnerSignature is the signature by msa owner

msaOwnerSignature: string

// newKeyOwnerSignature is the signature with new key

newKeyOwnerSignature: string

payload: {

// MSA Id of the user requesting the new key

msaId: string

// expiration block number for this payload

expiration: number

// newPublicKey in hex format

newPublicKey: string

}

}

Responses

- 200 Found public keys

application/json

{

// Job state

state?: string

referenceId: string

}

[GET]/v1/keys/{msaId}

- Summary

Fetch public keys given an MSA Id

- Operation id

KeysControllerV1_getKeys_v1

Responses

- 200 Found public keys

application/json

{

msaKeys: {

}

}

[GET]/v1/keys/publicKeyAgreements/getAddKeyPayload

- Summary

Get a properly encoded StatefulStorageItemizedSignaturePayloadV2 that can be signed.

- Operation id

KeysControllerV1_getPublicKeyAgreementsKeyPayload_v1

Parameters(Query)

msaId: string

newKey: string

Responses

- 200 Returned an encoded StatefulStorageItemizedSignaturePayloadV2 for signing

application/json

{

payload: {

actions: {

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}[]

// schemaId related to the payload

schemaId: number

// targetHash related to the stateful storage

targetHash: number

// The block number at which the signed proof will expire

expiration: number

}

// Raw encodedPayload to be signed

encodedPayload: string

}

[POST]/v1/keys/publicKeyAgreements

- Summary

Request to add a new public Key

- Operation id

KeysControllerV1_addNewPublicKeyAgreements_v1

RequestBody

- application/json

{

// AccountId in hex or SS58 format

accountId: string

payload: {

actions: {

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}[]

// schemaId related to the payload

schemaId: number

// targetHash related to the stateful storage

targetHash: number

// The block number at which the signed proof will expire

expiration: number

}

// proof is the signature for the payload

proof: string

}

Responses

- 200 Add new key request enqueued

application/json

{

// Job state

state?: string

referenceId: string

}

[POST]/v1/ics/{accountId}/publishAll

- Operation id

IcsControllerV1_publishAll_v1

RequestBody

- application/json

{

addIcsPublicKeyPayload: {

// AccountId in hex or SS58 format

accountId: string

payload: {

actions: {

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}[]

// schemaId related to the payload

schemaId: number

// targetHash related to the stateful storage

targetHash: number

// The block number at which the signed proof will expire

expiration: number

}

// proof is the signature for the payload

proof: string

}

addContextGroupPRIDEntryPayload: #/components/schemas/AddNewPublicKeyAgreementRequestDto

addContentGroupMetadataPayload: {

// AccountId in hex or SS58 format that signed the payload

accountId: string

// The signature of the payload

signature: string

// The payload that `signature` signed

payload: #/components/schemas/UpsertedPageDto

}

}

Responses

- 202

[GET]/v1/frequency/blockinfo

- Summary

Get information about current block

- Operation id

BlockInfoController_blockInfo_v1

Responses

- 200 Block information retrieved

[GET]/healthz

- Summary

Check the health status of the service

- Operation id

HealthController_healthz

Responses

- 200 Service is healthy

[GET]/livez

- Summary

Check the live status of the service

- Operation id

HealthController_livez

Responses

- 200 Service is live

[GET]/readyz

- Summary

Check the ready status of the service

- Operation id

HealthController_readyz

Responses

- 200 Service is ready

[GET]/metrics

- Operation id

PrometheusController_index

Responses

- 200

References

#/components/schemas/WalletV2RedirectResponseDto

{

// The base64url encoded JSON stringified signed request

signedRequest: string

// A publicly available Frequency node for SIWF dApps to connect to the correct chain

frequencyRpcUrl: string

// The compiled redirect url with all the parameters already built in

redirectUrl: string

}

#/components/schemas/WalletV2LoginRequestDto

{

// The code returned from the SIWF v2 Authentication service that can be exchanged for the payload. Required unless an `authorizationPayload` is provided.

authorizationCode?: string

// The SIWF v2 Authentication payload as a JSON stringified and base64url encoded value. Required unless an `authorizationCode` is provided.

authorizationPayload?: string

}

#/components/schemas/GraphKeySubject

{

// The id type of the VerifiedGraphKeyCredential.

id: string

// The encoded public key.

encodedPublicKeyValue: string

// The encoded private key. WARNING: This is sensitive user information!

encodedPrivateKeyValue: string

// How the encoded keys are encoded. Only "base16" (aka hex) currently.

encoding: string

// Any additional formatting options. Only "bare" currently.

format: string

// The encryption key algorithm.

type: string

// The DSNP key type.

keyType: string

}

#/components/schemas/WalletV2LoginResponseDto

{

// The ss58 encoded MSA Control Key of the login.

controlKey: string

// ReferenceId of an associated sign-up request queued task, if applicable

signUpReferenceId?: string

// Status of associated sign-up request queued task, if applicable

signUpStatus?: string

// The user's MSA Id, if one is already created. Will be empty if it is still being processed.

msaId?: string

// The user's validated email

email?: string

// The user's validated SMS/Phone Number

phoneNumber?: string

// The user's Private Graph encryption key.

graphKey?: #/components/schemas/GraphKeySubject

// The user's recovery secret.

recoverySecret?: string

rawCredentials: {

}[]

}

#/components/schemas/HandleResponseDto

{

base_handle: string

canonical_base: string

suffix: number

}

#/components/schemas/AccountResponseDto

{

msaId: string

handle: {

base_handle: string

canonical_base: string

suffix: number

}

}

#/components/schemas/RetireMsaPayloadResponseDto

{

// Hex-encoded representation of the "RetireMsa" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

// AccountId in hex or SS58 format

accountId: string

}

#/components/schemas/RetireMsaRequestDto

{

// Hex-encoded representation of the "RetireMsa" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

// AccountId in hex or SS58 format

accountId: string

// signature of the owner

signature: string

}

#/components/schemas/TransactionResponse

{

// Job state

state?: string

referenceId: string

}

#/components/schemas/SchemaDelegation

{

schemaId: number

revokedAtBlock?: number

}

#/components/schemas/Delegation

{

providerId: string

schemaDelegations: {

schemaId: number

revokedAtBlock?: number

}[]

revokedAtBlock?: number

}

#/components/schemas/DelegationResponseV2

{

msaId: string

delegations: {

providerId: string

schemaDelegations: {

schemaId: number

revokedAtBlock?: number

}[]

revokedAtBlock?: number

}[]

}

#/components/schemas/u32

{

}

#/components/schemas/DelegationResponse

{

providerId: string

schemaPermissions: {

}

revokedAt: {

}

}

#/components/schemas/RevokeDelegationPayloadResponseDto

{

// AccountId in hex or SS58 format

accountId: string

// MSA Id of the provider to whom the requesting user wishes to delegate

providerId: string

// Hex-encoded representation of the "revokeDelegation" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

}

#/components/schemas/RevokeDelegationPayloadRequestDto

{

// AccountId in hex or SS58 format

accountId: string

// MSA Id of the provider to whom the requesting user wishes to delegate

providerId: string

// Hex-encoded representation of the "revokeDelegation" extrinsic

encodedExtrinsic: string

// payload to be signed

payloadToSign: string

// signature of the owner

signature: string

}

#/components/schemas/HandlePayloadDto

{

// base handle in the request

baseHandle: string

// expiration block number for this payload

expiration: number

}

#/components/schemas/HandleRequestDto

{

// AccountId in hex or SS58 format

accountId: string

payload: {

// base handle in the request

baseHandle: string

// expiration block number for this payload

expiration: number

}

// proof is the signature for the payload

proof: string

}

#/components/schemas/ChangeHandlePayloadRequest

{

payload: {

// base handle in the request

baseHandle: string

// expiration block number for this payload

expiration: number

}

// Raw encodedPayload is the SCALE-encoded payload in hex format

encodedPayload: string

}

#/components/schemas/KeysRequestPayloadDto

{

// MSA Id of the user requesting the new key

msaId: string

// expiration block number for this payload

expiration: number

// newPublicKey in hex format

newPublicKey: string

}

#/components/schemas/KeysRequestDto

{

// msaOwnerAddress representing the target of this request

msaOwnerAddress: string

// msaOwnerSignature is the signature by msa owner

msaOwnerSignature: string

// newKeyOwnerSignature is the signature with new key

newKeyOwnerSignature: string

payload: {

// MSA Id of the user requesting the new key

msaId: string

// expiration block number for this payload

expiration: number

// newPublicKey in hex format

newPublicKey: string

}

}

#/components/schemas/KeysResponse

{

msaKeys: {

}

}

#/components/schemas/ItemActionType

{

"type": "string",

"enum": [

"ADD_ITEM",

"DELETE_ITEM"

],

"description": "Action Item type"

}

#/components/schemas/ItemActionDto

{

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}
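Both `encodedPayload` and `index` are optional in this schema, yet their descriptions tie each one to a specific action type. The sketch below checks that pairing before an action is sent. Reading the field comments as "ADD_ITEM needs `encodedPayload`, DELETE_ITEM needs `index`" is an interpretation of the schema rather than a documented rule, and `validateItemAction` is a hypothetical helper.

```typescript
// Mirrors ItemActionType and ItemActionDto from the reference.
type ItemActionType = "ADD_ITEM" | "DELETE_ITEM";

interface ItemAction {
  type: ItemActionType;
  encodedPayload?: string;
  index?: number;
}

// Return a list of problems; an empty list means the action looks well-formed.
function validateItemAction(action: ItemAction): string[] {
  const problems: string[] = [];
  if (action.type === "ADD_ITEM") {
    if (action.encodedPayload === undefined || action.encodedPayload === "") {
      problems.push("ADD_ITEM requires encodedPayload");
    }
  } else {
    const index = action.index;
    if (index === undefined || !Number.isInteger(index) || index < 0) {
      problems.push("DELETE_ITEM requires a non-negative integer index");
    }
  }
  return problems;
}
```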

#/components/schemas/ItemizedSignaturePayloadDto

{

actions: {

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}[]

// schemaId related to the payload

schemaId: number

// targetHash related to the stateful storage

targetHash: number

// The block number at which the signed proof will expire

expiration: number

}

#/components/schemas/AddNewPublicKeyAgreementPayloadRequest

{

payload: {

actions: {

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}[]

// schemaId related to the payload

schemaId: number

// targetHash related to the stateful storage

targetHash: number

// The block number at which the signed proof will expire

expiration: number

}

// Raw encodedPayload to be signed

encodedPayload: string

}

#/components/schemas/AddNewPublicKeyAgreementRequestDto

{

// AccountId in hex or SS58 format

accountId: string

payload: {

actions: {

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}[]

// schemaId related to the payload

schemaId: number

// targetHash related to the stateful storage

targetHash: number

// The block number at which the signed proof will expire

expiration: number

}

// proof is the signature for the payload

proof: string

}

#/components/schemas/UpsertedPageDto

{

// Schema id pertaining to this storage

schemaId: number

// Page id of this storage

pageId: number

// Hash of targeted page

targetHash: number

// The block number at which the signed proof will expire

expiration: number

payload: string

}

#/components/schemas/UpsertPagePayloadDto

{

// AccountId in hex or SS58 format that signed the payload

accountId: string

// The signature of the payload

signature: string

// The payload that `signature` signed

payload: #/components/schemas/UpsertedPageDto

}

#/components/schemas/IcsPublishAllRequestDto

{

addIcsPublicKeyPayload: {

// AccountId in hex or SS58 format

accountId: string

payload: {

actions: {

// Action Item type

type: #/components/schemas/ItemActionType

// encodedPayload to be added

encodedPayload?: string

// index of the item to be deleted

index?: number

}[]

// schemaId related to the payload

schemaId: number

// targetHash related to the stateful storage

targetHash: number

// The block number at which the signed proof will expire

expiration: number

}

// proof is the signature for the payload

proof: string

}

addContextGroupPRIDEntryPayload: #/components/schemas/AddNewPublicKeyAgreementRequestDto

addContentGroupMetadataPayload: {

// AccountId in hex or SS58 format that signed the payload

accountId: string

// The signature of the payload

signature: string

// The payload that `signature` signed

payload: #/components/schemas/UpsertedPageDto

}

}

Account Service

Webhooks API Reference


| Method | Path | Description |

|---|---|---|

| POST | /transaction-notify | Notify transaction |

Reference Table

| Name | Path | Description |

|---|---|---|

| TransactionType | #/components/schemas/TransactionType | |

| TxWebhookRspBase | #/components/schemas/TxWebhookRspBase | |

| PublishHandleOpts | #/components/schemas/PublishHandleOpts | |

| SIWFOpts | #/components/schemas/SIWFOpts | |

| PublishKeysOpts | #/components/schemas/PublishKeysOpts | |

| PublishGraphKeysOpts | #/components/schemas/PublishGraphKeysOpts | |

| TxWebhookOpts | #/components/schemas/TxWebhookOpts | |

| PublishHandleWebhookRsp | #/components/schemas/PublishHandleWebhookRsp | |

| SIWFWebhookRsp | #/components/schemas/SIWFWebhookRsp | |

| PublishKeysWebhookRsp | #/components/schemas/PublishKeysWebhookRsp | |

| PublishGraphKeysWebhookRsp | #/components/schemas/PublishGraphKeysWebhookRsp | |

| RetireMsaWebhookRsp | #/components/schemas/RetireMsaWebhookRsp | |

| RevokeDelegationWebhookRsp | #/components/schemas/RevokeDelegationWebhookRsp | |

| TxWebhookRsp | #/components/schemas/TxWebhookRsp | |

Path Details

[POST]/transaction-notify

- Summary

Notify transaction

RequestBody

- application/json

{

"oneOf": [

{

"$ref": "#/components/schemas/PublishHandleWebhookRsp"

},

{

"$ref": "#/components/schemas/SIWFWebhookRsp"

},

{

"$ref": "#/components/schemas/PublishKeysWebhookRsp"

},

{

"$ref": "#/components/schemas/PublishGraphKeysWebhookRsp"

},

{

"$ref": "#/components/schemas/RetireMsaWebhookRsp"

},

{

"$ref": "#/components/schemas/RevokeDelegationWebhookRsp"

}

]

}

Responses

- 200 Successful notification

- 400 Bad request
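A receiving application has to expose this endpoint itself. The following TypeScript sketch shows the core of such a handler as a framework-independent function: it checks the three required string fields of `TxWebhookRspBase` and maps the outcome to the 200 and 400 responses listed above. The names `handleTransactionNotify`, `NotifyResult`, and `onNotify` are hypothetical, and wiring the function into an HTTP server is left out.

```typescript
interface TxWebhookBase {
  providerId: string;
  referenceId: string;
  msaId: string;
}

type NotifyResult = { status: 200 } | { status: 400; reason: string };

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Validates the already-parsed JSON body and hands it to the application callback.
function handleTransactionNotify(
  body: unknown,
  onNotify: (notification: TxWebhookBase) => void,
): NotifyResult {
  if (!isRecord(body)) {
    return { status: 400, reason: "body must be a JSON object" };
  }
  for (const field of ["providerId", "referenceId", "msaId"]) {
    if (typeof body[field] !== "string") {
      return { status: 400, reason: `${field} must be a string` };
    }
  }
  onNotify({
    providerId: body["providerId"] as string,
    referenceId: body["referenceId"] as string,
    msaId: body["msaId"] as string,
  });
  return { status: 200 };
}
```

Keeping the validation in a pure function makes it easy to unit test and to reuse under whichever HTTP framework the application already runs.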

References

#/components/schemas/TransactionType

{

"type": "string",

"enum": [

"CHANGE_HANDLE",

"CREATE_HANDLE",

"SIWF_SIGNUP",

"SIWF_SIGNIN",

"ADD_KEY",

"RETIRE_MSA",

"ADD_PUBLIC_KEY_AGREEMENT",

"REVOKE_DELEGATION"

],

"x-enum-varnames": [

"CHANGE_HANDLE",

"CREATE_HANDLE",

"SIWF_SIGNUP",

"SIWF_SIGNIN",

"ADD_KEY",

"RETIRE_MSA",

"ADD_PUBLIC_KEY_AGREEMENT",

"REVOKE_DELEGATION"

]

}
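In client code, the enum above can be mirrored as a string-literal union with a runtime guard, so that untrusted webhook input is narrowed before use. This is a minimal sketch; `TRANSACTION_TYPES` and `isTransactionType` are hypothetical names, while the values are copied from the schema.

```typescript
const TRANSACTION_TYPES = [
  "CHANGE_HANDLE",
  "CREATE_HANDLE",
  "SIWF_SIGNUP",
  "SIWF_SIGNIN",
  "ADD_KEY",
  "RETIRE_MSA",
  "ADD_PUBLIC_KEY_AGREEMENT",
  "REVOKE_DELEGATION",
] as const;

// Union of the literal values listed in the schema.
type TransactionType = (typeof TRANSACTION_TYPES)[number];

// Runtime guard that narrows an unknown value to TransactionType.
function isTransactionType(value: unknown): value is TransactionType {
  return (
    typeof value === "string" &&
    (TRANSACTION_TYPES as readonly string[]).includes(value)
  );
}
```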

#/components/schemas/TxWebhookRspBase

{

providerId: string

referenceId: string

msaId: string

transactionType?: enum[CHANGE_HANDLE, CREATE_HANDLE, SIWF_SIGNUP, SIWF_SIGNIN, ADD_KEY, RETIRE_MSA, ADD_PUBLIC_KEY_AGREEMENT, REVOKE_DELEGATION]

}

#/components/schemas/PublishHandleOpts

{

handle: string

}

#/components/schemas/SIWFOpts

{

handle: string

accountId: string

}

#/components/schemas/PublishKeysOpts

{

newPublicKey: string

}

#/components/schemas/PublishGraphKeysOpts

{

schemaId: string

}

#/components/schemas/TxWebhookOpts

{

}

#/components/schemas/PublishHandleWebhookRsp

{

}

#/components/schemas/SIWFWebhookRsp

{

}

#/components/schemas/PublishKeysWebhookRsp

{

}

#/components/schemas/PublishGraphKeysWebhookRsp

{

}

#/components/schemas/RetireMsaWebhookRsp

{

}

#/components/schemas/RevokeDelegationWebhookRsp

{

}

#/components/schemas/TxWebhookRsp

{

"oneOf": [

{

"$ref": "#/components/schemas/PublishHandleWebhookRsp"

},

{

"$ref": "#/components/schemas/SIWFWebhookRsp"

},

{

"$ref": "#/components/schemas/PublishKeysWebhookRsp"

},

{

"$ref": "#/components/schemas/PublishGraphKeysWebhookRsp"

},

{

"$ref": "#/components/schemas/RetireMsaWebhookRsp"

},

{

"$ref": "#/components/schemas/RevokeDelegationWebhookRsp"

}

]

}
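The individual webhook response schemas are rendered empty above, so the sketch below rests on an assumption: that each response combines `TxWebhookRspBase` with the similarly named `...Opts` schema and a `transactionType` discriminator. Under that assumption, a TypeScript discriminated union lets a consumer handle each variant exhaustively. The pairing of transaction types with variants and the `summarizeWebhook` helper are hypothetical.

```typescript
interface Base {
  providerId: string;
  referenceId: string;
  msaId: string;
}

// Assumed shapes: base fields plus the matching Opts fields.
type PublishHandleRsp = Base & { transactionType: "CREATE_HANDLE" | "CHANGE_HANDLE"; handle: string };
type SiwfRsp = Base & { transactionType: "SIWF_SIGNUP" | "SIWF_SIGNIN"; handle: string; accountId: string };
type PublishKeysRsp = Base & { transactionType: "ADD_KEY"; newPublicKey: string };
type PublishGraphKeysRsp = Base & { transactionType: "ADD_PUBLIC_KEY_AGREEMENT"; schemaId: string };
type RetireMsaRsp = Base & { transactionType: "RETIRE_MSA" };
type RevokeDelegationRsp = Base & { transactionType: "REVOKE_DELEGATION" };

type TxWebhookRsp =
  | PublishHandleRsp
  | SiwfRsp
  | PublishKeysRsp
  | PublishGraphKeysRsp
  | RetireMsaRsp
  | RevokeDelegationRsp;

function assertNever(value: never): never {
  throw new Error(`Unhandled webhook variant: ${JSON.stringify(value)}`);
}

// Produces a short log line; the switch fails to compile if a variant is missed.
function summarizeWebhook(rsp: TxWebhookRsp): string {
  switch (rsp.transactionType) {
    case "CREATE_HANDLE":
    case "CHANGE_HANDLE":
      return `msa ${rsp.msaId}: handle ${rsp.handle}`;
    case "SIWF_SIGNUP":
    case "SIWF_SIGNIN":
      return `msa ${rsp.msaId}: SIWF for account ${rsp.accountId}`;
    case "ADD_KEY":
      return `msa ${rsp.msaId}: added key ${rsp.newPublicKey}`;
    case "ADD_PUBLIC_KEY_AGREEMENT":
      return `msa ${rsp.msaId}: graph key for schema ${rsp.schemaId}`;
    case "RETIRE_MSA":
      return `msa ${rsp.msaId}: retired`;
    case "REVOKE_DELEGATION":
      return `msa ${rsp.msaId}: delegation to provider ${rsp.providerId} revoked`;
    default:
      return assertNever(rsp);
  }
}
```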

Content Publishing Service

The Content Publishing Service allows users to create, post, and manage content on the Frequency network. It supports various content types such as text, images, and videos.

API Reference

Configuration

ℹ️ Feel free to adjust your environment variables to taste. This application recognizes the following environment variables:

| Name | Description | Range/Type | Required? | Default |

|---|---|---|---|---|

| API_BODY_JSON_LIMIT | API JSON body size limit as a string (for example, 100kb or 5mb) | string | | 1mb |

| API_PORT | HTTP port that the application listens on | 1025 - 65535 | | 3000 |

| API_TIMEOUT_MS | Overall API timeout limit in milliseconds. This is the maximum time allowed for any API request to complete. Any HTTP_RESPONSE_TIMEOUT_MS value must be less than this value | > 0 | | 30000 |

| ASSET_EXPIRATION_INTERVAL_SECONDS | Number of seconds to keep completed asset entries in the cache before expiring them | > 0 | Y | |

| ASSET_UPLOAD_VERIFICATION_DELAY_SECONDS | Base delay in seconds used for exponential backoff while waiting for uploaded assets to be verified available before publishing a content notice | >= 0 | Y | |

| BATCH_INTERVAL_SECONDS | Number of seconds between publishing batches. The service waits this long for additional content before submitting a batch; it represents a trade-off between maximum batch fullness and minimal wait time for content | > 0 | Y | |

| BATCH_MAX_COUNT | Maximum number of items that can be submitted in a single batch | > 0 | Y | |

| CACHE_KEY_PREFIX | Prefix to use for Redis cache keys | string | Y | |

| CAPACITY_LIMIT | Maximum amount of provider capacity this app is allowed to use (per epoch). type: 'percentage' or 'amount'; value: number (may be a percentage, e.g. '80', or an absolute amount of capacity) | JSON | Y | |

| FILE_UPLOAD_COUNT_LIMIT | Maximum number of files that can be uploaded in a single upload call | > 0 | Y | |

| FILE_UPLOAD_MAX_SIZE_IN_BYTES | Maximum size (in bytes) allowed for each uploaded file. This limit applies to individual files in both single and batch uploads | > 0 | Y | |

| FREQUENCY_API_WS_URL | Blockchain API Websocket URL | ws(s): URL | Y | |

| FREQUENCY_TIMEOUT_SECS | Frequency chain connection timeout limit; app will terminate if disconnected longer | integer | | 10 |

| HTTP_RESPONSE_TIMEOUT_MS | Timeout in milliseconds to wait for a response as part of a request to an HTTP endpoint. Must be less than API_TIMEOUT_MS | > 0 and < API_TIMEOUT_MS | | 3000 |

| IPFS_BASIC_AUTH_SECRET | If using Infura, put auth token here, or leave blank for Kubo RPC | string | | blank |

| IPFS_BASIC_AUTH_USER | If using Infura, put Project ID here, or leave blank for Kubo RPC | string | | blank |

| IPFS_ENDPOINT | URL to IPFS endpoint | URL | Y | |

| IPFS_GATEWAY_URL | IPFS gateway URL. '[CID]' is a token that will be replaced with an actual content ID | URL template | Y | |

| PRETTY | Whether logs should be pretty-printed on one line or multiple lines, or plain JSON | true \| false \| compact | N | false |